Writable getters

Setters removing themselves are reminiscent of Ouroboros, the serpent eating its own tail, an ancient symbol.

A pattern that has come up a few times in my code is the following: an object has a property which defaults to an expression based on its other properties unless it’s explicitly set, in which case it functions like a normal property. Essentially, the expression functions as a default value.

Some examples of use cases:

- An object where a default id is generated from its name or title, but can also have custom ids.

- An object with information about a human, where name can be either specified explicitly or generated from firstName and lastName if not specified.

- An object with parameters for drawing an ellipse, where ry defaults to rx if not explicitly set.

- An object literal with date information, and a readable property which formats the date, but can be overwritten with a custom human-readable format.

- An object representing parts of a Github URL (e.g. username, repo, branch) with an apiCall property which can be either customized or generated from the parts (this is actually the example which prompted this blog post)

Ok, so now that I convinced you about the utility of this pattern, how do we implement it in JS?
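One possible sketch, assuming a hypothetical person object (the property names and values below are illustrative, not taken from any real codebase): the getter computes the default, and the setter replaces the accessor with a plain data property the first time a value is assigned.

```javascript
// Hypothetical example object; all names here are illustrative.
const person = {
    firstName: "Lea",
    lastName: "Verou",

    // Default: computed from the other properties on every read
    get name() {
        return `${this.firstName} ${this.lastName}`;
    },

    // First explicit assignment: swap the accessor for a normal data property
    set name(value) {
        Object.defineProperty(this, "name", {
            value,
            writable: true,
            enumerable: true,
            configurable: true,
        });
    },
};

console.log(person.name); // "Lea Verou"

person.firstName = "Maria";
console.log(person.name); // "Maria Verou" (still computed)

person.name = "Dr. Verou";
person.firstName = "Lea";
console.log(person.name); // "Dr. Verou" (now a plain property)
```

This relies on accessors declared in an object literal being configurable, which is what allows Object.defineProperty() to redefine them from inside the setter.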

Position Statement for the 2020 W3C TAG Election

Update: I got elected!! Thank you so much to every W3C member organization who voted for me. 🙏🏼 Now on to making the Web better, alongside fellow TAG members!

Context: I’m running for one of the four open seats in this year’s W3C TAG election. The W3C Technical Architecture Group (TAG) is the Working Group that ensures that Web Platform technologies are usable and follow consistent design principles, whether they are created inside or outside W3C. It advocates for the needs of everyone who uses the Web and everyone who works on the Web. If you work for a company that is a W3C Member, please consider encouraging your AC rep to vote for me! My candidate statement follows.

Hi, I’m Lea Verou. Equally at home in Web development, the standards process, and programming language design, I bring a rarely-found cross-disciplinary understanding of the full stack of front-end development.

I have a thorough and fundamental understanding of all the core technologies of the Web Platform: HTML, CSS, JS, DOM, and SVG. I bring the experience and perspective of having worked as a web designer & developer in the trenches — not in large corporate systems, but on smaller, independent projects for clients, the type of projects that form the majority of the Web. I have started many open source projects, used on millions of websites, large and small. Some of my work has been incorporated in browser dev tools, and some has helped push CSS implementations forwards.

However, unlike most web developers, I am experienced in working within W3C, both as a longtime member of the CSS Working Group, as well as a W3C Staff alumnus. This experience has given me a fuller grasp of Web technology development: not just the authoring side, but also the needs and constraints of implementation teams, the kinds of problems that tend to show up in our work, and the design principles we apply. I understand in practice how the standards process at W3C addresses the problems and weighs up the necessary compromises — from high-level design changes to minute details — to create successful standards for the Web.

I have spent over six years doing PhD research at MIT on the intersection of programming language design and human-computer interaction. My research has been published in top-tier peer-reviewed academic venues. My strong usability background gives me the ability to identify API design pitfalls early on in the design process.

In addition, I have been teaching web technologies for over a decade, both to professional web developers, through my numerous talks, workshops, and bestselling book, and as an instructor and course co-creator for MIT. This experience helps me to easily identify aspects of API design that can make a technology difficult to learn and conceptualize.

If elected, I will work with the rest of the TAG to:

- Ensure that web technologies are not only powerful, but also learnable and approachable, with a smooth ease-of-use to complexity curve.

- Ensure that where possible, commonly needed functionality is available through approachable declarative HTML or CSS syntax and not solely through JS APIs.

- Work towards making the Web platform more extensible, to allow experienced developers to encapsulate complexity and make it available to novice authors, empowering the latter to create compelling content. Steps have been made in this direction with Web Components and the Houdini specifications, but there are still many gaps that need to be addressed.

- Record design principles that are often implicit knowledge in standards groups, passed on but never recorded. Explicit design principles help us keep technologies internally consistent, but also assist library developers who want to design APIs that are consistent with the Web Platform and feel like a natural extension of it. A great start has been made with the initial drafts of the Design Principles document, but there is still a lot to be done.

- Guide those seeking TAG review, some of whom may be new to the standards process, to improve their specifications.

Having worn all these hats, I can understand and empathize with the needs of designers and developers, authors and implementers, practitioners and academics, putting me in a unique position to help ensure the Web Platform remains consistent, usable, and inclusive.

I would like to thank OpenJS Foundation and Bocoup for graciously funding my TAG-related travel, in the event that I am elected.

Selected endorsements

Tantek Çelik, Mozilla’s AC representative, longtime CSS WG member, and creator of many popular technologies:

I have had the privilege of working with Lea in the CSS Working Group, and in the broader web development community for many years. Lea is an expert in the practical real-world-web technologies of the W3C, how they fit together, has put them into practice, has helped contribute to their evolution, directly in specs and in working groups. She’s also a passionate user & developer advocate, both of which I think are excellent for the TAG.

Source: https://lists.w3.org/Archives/Member/w3c-ac-forum/2021JanMar/0015.html

Florian Rivoal, CSS WG Invited Expert and editor of several specifications, elected W3C AB member, ex-Opera:

Elika Etemad aka fantasai, prolific editor of dozens of W3C specifications, CSS WG member for over 16 years, and elected W3C AB member:

One TPAC long ago, several members of the TAG on a recruiting spree went around asking people to run for the TAG. I personally turned them down for multiple reasons (including that I’m only a very poor substitute for David Baron), but it occurred to me recently that there was a candidate that they do need: Lea Verou.

Lea is one of those elite developers whose technical expertise ranges across the entire Web platform. She doesn’t just use HTML, CSS, JS, and SVG, she pushes the boundaries of what they’re capable of. Meanwhile her authoring experience spans JS libraries to small site design to CSS+HTML print publications, giving her a personal appreciation of a wide variety of use cases. Unlike most other developers in her class, however, Lea also brings her experience working within W3C as a longtime member of the CSS Working Group.

I’ve seen firsthand that she is capable of participating at the deep and excruciatingly detailed level that we operate here, and that her attention is not just on the feature at hand but also the system and its usability and coherence as a whole. She knows how the standards process works, how use cases and implementation constraints drive our design decisions, and how participation in the arcane discussions at W3C can make a real difference in the future usability of the Web.

I’m recommending her for the TAG because she’s able to bring a perspective that is needed and frequently missing from our technical discussions which so often revolve around implementers, and because elevating her to TAG would give her both the opportunity and the empowerment to bring that perspective to more of our Web technology development here at W3C and beyond.

Source: https://lists.w3.org/Archives/Member/w3c-ac-forum/2020OctDec/0055.html

Bruce Lawson, Opera alumnus, world-renowned accessibility expert, speaker, author:

Brian Kardell, AC representative for both OpenJS Foundation and Igalia:

The OpenJS Foundation is very pleased to nominate and offer our support for Lea Verou to the W3C TAG. We believe that she brings a fresh perspective, diverse background and several kinds of insight that would be exceptionally useful in the TAG’s work.

Lea Verou is another easy choice for me. Lea brings a really diverse background, set of perspectives and skills to the table. She’s worked for the W3C, she’s a great communicator to developers (this is definitely a great skill in TAG whose outreach is important), she’s worked with small teams, produced a number of popular libraries and helped drive some interesting standards. The OpenJS Foundation was pleased to nominate her, but Frontiers and several others were also supportive. Lea also deserves “high marks”.

The case for Weak Dependencies in JS

Earlier today, I was briefly entertaining the idea of writing a library to wrap and enhance querySelectorAll in certain ways. I thought I’d rather not introduce a Parsel dependency out of the box, but only use it to parse selectors properly when it’s available, and use a cruder regex when it’s not (which would cover most use cases for what I wanted to do).

In the olden days, when every library introduced a global, I could just do:

    if (window.Parsel) {
        let ast = Parsel.parse(selector);
        // rewrite selector properly, with AST
    }
    else {
        // crude regex replace
    }

However, with ESM, there doesn’t seem to be a way to detect whether a module is imported, without actually importing it yourself.

I tweeted about this…

I thought this was a common paradigm, and everyone would understand why this was useful. However, I was surprised to find that most people were baffled about my use case. Most of them thought I was either talking about conditional imports, or error recovery after failed imports.

I suspect it might be because my primary perspective for writing JS is that of a library author, where I do not control the host environment, whereas for most developers, their primary perspective is that of writing JS for a specific app or website.

After Kyle Simpson asked me to elaborate about the use case, I figured a blog post was in order.

The use case is essentially progressive enhancement (in fact, I toyed with the idea of titling this blog post “Progressively Enhanced JS”). If library X is loaded already by other code, do a more elaborate thing and cover all the edge cases, otherwise do a more basic thing. It’s for dependencies that are not really dependencies, but more like nice-to-haves.
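To make the "nice-to-have" idea concrete, here is a minimal sketch of the global-based version of the pattern. The names SelectorParser and splitList() are hypothetical placeholders for an optional library, not Parsel's actual API, and the env parameter exists only so the behaviour can be tried without touching real globals. This only works when the optional library exposes a global.

```javascript
// Hypothetical names: `SelectorParser` and its `splitList()` method are
// placeholders for an optional library, not Parsel's actual API.
function splitSelectorList(selector, env = globalThis) {
    const parser = env.SelectorParser;

    if (parser) {
        // Enhanced path: other code already loaded the parser, so use it
        return parser.splitList(selector);
    }

    // Basic path: crude split, wrong for commas nested in parens or strings
    return selector.split(",").map(part => part.trim());
}

// Nothing is loaded here, so the crude path runs
console.log(splitSelectorList("h1, h2").length); // 2

// The crude path mis-splits nested commas, which is where the parser would help
console.log(splitSelectorList(":is(h1, h2), p").length); // 3
```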

Simple pie charts with fallback, today

Five years ago, I had written this extensive Smashing Magazine article detailing multiple different methods for creating simple pie charts, either with clever use of transforms and pseudo-elements, or with SVG stroke-dasharray. In the end, I mentioned creating pie charts with conic gradients, as a future technique. It was actually a writeup of my “The Missing Slice” talk, and an excerpt of my CSS Secrets book, which had just been published.

I was reminded of this article today by someone on Twitter:

https://twitter.com/sam\_kent\_/status/1326805431390531584

I suggested conic gradients, since they are now supported in >87% of users’ browsers, but he needed to support IE11. He suggested using my polyfill from back then, but this is not a very good idea today.

Indeed, unless you really need to display conic gradients, even I would not recommend using the polyfill on a production facing site. It requires -prefix-free, which re-fetches (albeit from cache) your entire CSS and sticks it in a <style> element, with no sourcemaps since those were not a thing back when -prefix-free was written. If you’re already using -prefix-free, the polyfill is great, but if not, it’s way too heavy a dependency.

Pie charts with fallback (modern browsers)

Instead, what I would recommend is graceful degradation, i.e. to use the same color stops, but in a linear gradient.

We can use @supports and have quite an elaborate progress bar fallback. For example, take a look at this 40% pie chart:

    .pie {
        height: 20px;
        background: linear-gradient(to right, deeppink 40%, transparent 0);
        background-color: gold;
    }

    @supports (background: conic-gradient(white, black)) {
        .pie {
            width: 200px; height: 200px;
            background-image: conic-gradient(deeppink 40%, transparent 0);
            border-radius: 50%;
        }
    }

This is what it looks like in Firefox 82 (conic gradients are scheduled to ship unflagged in Firefox 83) or IE11:

Note that because @supports is only used for the pie and not the fallback, the lack of IE11 support for it doesn’t affect us one iota.

If relatively modern browsers are all we care about, we could even use CSS variables for the percentage and the color stops, to avoid duplication, and to be able to set the percentage from the markup:

    <div class="pie" style="--p: 40%"></div>

    .pie {
        height: 20px;
        --stops: deeppink var(--p, 0%), transparent 0;
        background: linear-gradient(to right, var(--stops));
        background-color: gold;
    }

    @supports (background: conic-gradient(white, black)) {
        .pie {
            width: 200px; height: 200px;
            background-image: conic-gradient(var(--stops));
            border-radius: 50%;
        }
    }

You can use a similar approach for 3 or more segments, or for a vertical bar.

One issue with this approach is that our layout needs to work well with two charts of completely different proportions. To avoid that, we could just use a square:

    .pie {
        width: 200px;
        height: 200px;
        background: linear-gradient(to right, deeppink 40%, transparent 0) gold;
    }

    @supports (background: conic-gradient(white, black)) {
        .pie {
            background-image: conic-gradient(deeppink 40%, transparent 0);
            border-radius: 50%;
        }
    }

which produces this in IE11:

Granted, a square progress bar is not the same, but it can still convey the same relationship, and it is easier to design a layout around since it always has the same aspect ratio.

Why not use radial gradients?

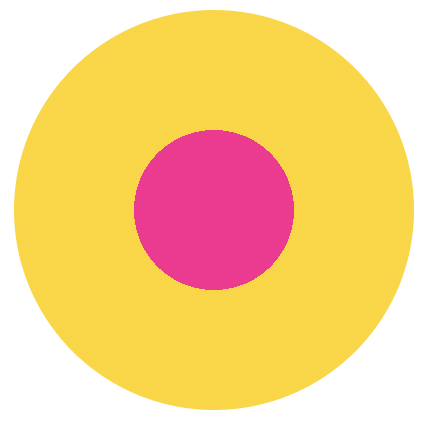

You might be wondering: why not just use a radial gradient, which could use the same dimensions and rounding? Something like this:

There are two problems with this. The first one may be obvious: Horizontal or vertical bars are common for showing the proportional difference between two amounts, albeit less good than a pie chart because it’s harder to compare with 50% at a glance (yes Tufte, pie charts can be better for some things!). Such circular graphs are very uncommon. And for good reason: Drawn naively (e.g. in our case if the radius of the pink circle is 40% of the radius of the yellow circle), their areas do not have the relative relationship we want to depict.

Why is that? Let r be the radius of the yellow circle. As we know from middle school, the area of the entire circle is πr², so the area of the yellow ring is πr² - (area of pink circle). The area of the pink circle is π(0.4r)² = 0.16πr². Therefore, the area of the yellow ring is πr² - 0.16πr² = 0.84πr² and their relative ratio is 0.16πr² / 0.84πr² = 0.16 / 0.84 ≅ 0.19 which is a far cry from the 40/60 (≅ 0.67) we were looking for!

Instead, if we wanted to draw a similar visualization to depict the correct relationship, we need to start from the ratio and work our way backwards. Let r be the radius of the yellow circle and kr the radius of the pink circle. Their ratio is π(kr)² / (πr² - π(kr)²) = 4/6 ⇒ k² / (1 - k²) = 4/6 ⇒ (1 - k²) / k² = 6/4 ⇒ 1/k² - 1 = 6/4 ⇒ 1/k² = 10/4 ⇒ k = 2 / sqrt(10) ≅ 0.632. Therefore, the radius of the pink circle should be around 63.2% of the radius of the yellow circle, and a more correct chart would look like this:

In the general case where the pink circle is depicting the percentage p, we’d want the radius of the pink circle to be sqrt(p) times the radius of the yellow circle (for p = 40%, sqrt(0.4) ≅ 0.632, matching the result above). That’s a fair bit of calculation that we can’t yet automate (though sqrt() is coming!). Moral of the story: use a bar as your fallback!
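As a quick check of the arithmetic, here is a small sketch in JS. The function name is hypothetical. It generalizes the derivation: k² / (1 - k²) = p / (1 - p) gives k² = p, so the inner radius is sqrt(p) times the outer one.

```javascript
// Hypothetical helper: fraction of the outer radius the inner circle needs so that
// the areas of the inner circle and the outer ring are in proportion p : (1 - p).
function innerRadiusFraction(p) {
    if (!(p >= 0 && p <= 1)) {
        throw new RangeError("p must be between 0 and 1");
    }

    // k² / (1 - k²) = p / (1 - p)  ⇒  k² = p  ⇒  k = √p
    return Math.sqrt(p);
}

const k = innerRadiusFraction(0.4);
console.log(k.toFixed(3)); // "0.632"

// Ratio of inner circle area to ring area, for the naive and the corrected radius
const naiveRatio = 0.4 ** 2 / (1 - 0.4 ** 2);
const correctedRatio = k ** 2 / (1 - k ** 2);
console.log(naiveRatio.toFixed(2), correctedRatio.toFixed(2)); // "0.19" "0.67"
```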

The --var: ; hack to toggle multiple values with one custom property

What if I told you you could use a single property value to turn multiple different values on and off across multiple different properties and even across multiple CSS rules?

What if I told you you could turn this flat button into a glossy skeuomorphic button by just tweaking one custom property --is-raised, and that would set its border, background image, box and text shadows in one fell swoop?

How, you may ask?

The crux of this technique is this: There are two custom property values that work almost everywhere there is a var() call with a fallback.

The more obvious one that you probably already know is the initial value, which makes the property just apply its fallback. So, in the following code:

    background: var(--foo, linear-gradient(white, transparent)) hsl(220 10% 50%);
    border: 1px solid var(--foo, rgb(0 0 0 / .1));
    color: rgb(0 0 0 var(--foo, / .8));

We can set --foo to initial to enable these “fallbacks” and append these values to the property value, adding a gradient, setting a border-color, and making the text color translucent in one go. But what to do when we want to turn these values off? Any non-initial value for --foo (that doesn’t create cycles) should work. But is there one that works in all three declarations?

It turns out there is another value that works everywhere, in every property a var() reference is present, and you’d likely never guess what it is (unless you have watched any of my CSS variable talks and have a good memory for passing mentions of things).

Intrigued?

It’s whitespace! Whitespace is significant in a custom property. When you write something like this:

--foo: ;

This is not an invalid declaration. This is a declaration where the value of --foo is literally one space character. However, whitespace is valid in every CSS property value, everywhere a var() is allowed, and does not affect its computed value in any way. So, we can just set our property to one space (or even a comment) and not affect any other value present in the declaration. E.g. this:

    --foo: ;
    background: var(--foo, linear-gradient(white, transparent)) hsl(220 10% 50%);

produces the same result as:

background: hsl(220 10% 50%);

We can take advantage of this to essentially turn var() into a single-clause if() function and conditionally append values based on a single custom property.

As a proof of concept, here is the two button demo refactored using this approach:

Limitations

I originally envisioned this as a building block for a horrible hack to enable “mixins” in the browser, since @apply is now defunct. However, the big limitation is that this only works for appending values to existing values, or setting a property to either a whole value or initial. There is no way to say “the background should be red if --foo is set and white otherwise”. Some such conditionals can be emulated with clever use of appending, but not most.

And of course there’s a certain readability issue: --foo: ; looks like a mistake and --foo: initial looks pretty weird, unless you’re aware of this technique. However, that can easily be solved with comments. Or even constants:

    :root {
        --ON: initial;
        --OFF: ;
    }

    button {
        --is-raised: var(--OFF);
        /* … */
    }

    #foo {
        --is-raised: var(--ON);
    }

Also do note that eventually we will get a proper if() and won’t need such horrible hacks to emulate it; discussions are already underway (w3c/csswg-drafts#5009, w3c/csswg-drafts#4731).

So what do you think? Horrible hack, useful technique, or both? 😀

Prior art

Turns out this was independently discovered by two people before me:

And it was called “space toggle hack” in case you want to google it!

The failed promise of Web Components

Web Components had so much potential to empower HTML to do more, and make web development more accessible to non-programmers and easier for programmers. Remember how exciting it was every time we got new shiny HTML elements that actually do stuff? Remember how exciting it was to be able to do sliders, color pickers, dialogs, disclosure widgets straight in the HTML, without having to include any widget libraries?

The promise of Web Components was that we’d get this convenience, but for a much wider range of HTML elements, developed much faster, as nobody needs to wait for the full spec + implementation process. We’d just include a script, and boom, we have more elements at our disposal!

Or, that was the idea. Somewhere along the way, the space got flooded by JS frameworks aficionados, who revel in complex APIs, overengineered build processes and dependency graphs that look like the roots of a banyan tree.

This is what the roots of a Banyan tree look like. Photo by David Stanley on Flickr (CC-BY).

Perusing the components on webcomponents.org fills me with anxiety, and I’m perfectly comfortable writing JS — I write JS for a living! What hope do those who can’t write JS have? Using a custom element from the directory often needs to be preceded by a ritual of npm flugelhorn, import clownshoes, build quux, all completely unapologetically because “here is my truckload of dependencies, yeah, what”. Many steps are even omitted, likely because they are “obvious”. Often, you wade through the maze only to find the component doesn’t work anymore, or is not fit for your purpose.

Besides setup, the main problem is that HTML is not treated with the appropriate respect in the design of these components. They are not designed as closely as possible to standard HTML elements, but expect JS to be written for them to do anything. HTML is simply treated as a shorthand, or worse, as merely a marker to indicate where the element goes in the DOM, with all parameters passed in via JS. I recall a wonderful talk by Jeremy Keith a few years ago about this very phenomenon, where he discussed this e-shop Web components demo by Google, which is the poster child of this practice. These are the entire contents of its <body> element:

    <body>
        <shop-app unresolved="">SHOP</shop-app>
        <script src="node_assets/@webcomponents/webcomponentsjs/webcomponents-loader.js"></script>
        <script type="module" src="src/shop-app.js"></script>
        <script>window.performance&&performance.mark&&performance.mark("index.html");</script>
    </body>

If this is how Google is leading the way, how can we hope for contributors to design components that follow established HTML conventions?

Jeremy criticized this practice from the aspect of backwards compatibility: when JS is broken or not enabled, or the browser doesn’t support Web Components, the entire website is blank. While this is indeed a serious concern, my primary concern is one of usability: HTML is a lower barrier to entry language. Far more people can write HTML than JS. Even for those who do eventually write JS, it often comes after spending years writing HTML & CSS.

If components are designed in a way that requires JS, this excludes thousands of people from using them. And even for those who can write JS, HTML is often easier: you don’t see many people rolling their own sliders or using JS-based ones once <input type="range"> became widely supported, right?

Even when JS is unavoidable, it’s not black and white. A well designed HTML element can reduce the amount and complexity of JS needed to a minimum. Think of the <dialog> element: it usually does require *some* JS, but it’s usually rather simple JS. Similarly, the <video> element is perfectly usable just by writing HTML, and has a comprehensive JS API for anyone who wants to do fancy custom things.

The other day I was looking for a simple, dependency free, tabs component. You know, the canonical example of something that is easy to do with Web Components, the example 50% of tutorials mention. I didn’t even care what it looked like, it was for a testing interface. I just wanted something that is small and works like a normal HTML element. Yet, it proved so hard I ended up writing my own!

Can we fix this?

I’m not sure if this is a design issue, or a documentation issue. Perhaps for many of these web components, there are easier ways to use them. Perhaps there are vanilla web components out there that I just can’t find. Perhaps I’m looking in the wrong place and there is another directory somewhere with different goals and a different target audience.

But if not, and if I’m not alone in feeling this way, we need a directory of web components with strict inclusion criteria:

- Plug and play. No dependencies, no setup beyond including one <script> tag. If a dependency is absolutely needed (e.g. in a map component it doesn’t make sense to draw your own maps), the component loads it automatically if it’s not already loaded.

- Syntax and API follow conventions established by built-in HTML elements, and anything that can be done without the component user writing JS is doable without JS, per the W3C principle of least power.

- Accessible by default via sensible ARIA defaults, just like normal HTML elements.

- Themable via ::part(), selective inheritance and custom properties. Very minimal style by default. Normal CSS properties should just “work” to the extent possible.

- Only one component of a given type in the directory, that is flexible and extensible and continuously iterated on and improved by the community. Not 30 different sliders and 15 different tabs that users have to wade through. No branding, no silos of “component libraries”. Only elements that are designed as closely as possible to what a browser would implement in every way the current technology allows.

I would be up for working on this if others feel the same way, since that is not a project for one person to tackle. Who’s with me?

UPDATE: Wow this post blew up! Thank you all for your interest in participating in a potential future effort. I’m currently talking to stakeholders of some of the existing efforts to see if there are any potential collaborations before I go off and create a new one. Follow me on Twitter to hear about the outcome!

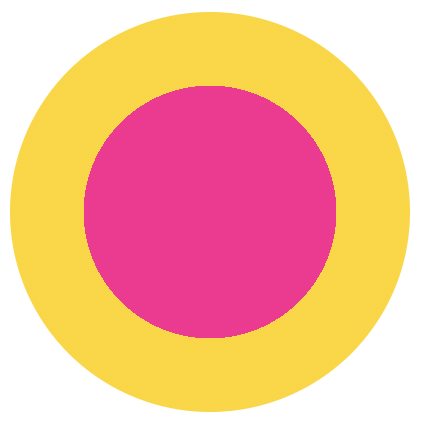

Developer priorities throughout their career

I made this chart in the amazing Excalidraw about two weeks ago:

It only took me 10 minutes! Shortly after, my laptop broke down into repeated kernel panics, and it spent about 10 days in service (I was in a remote place when it broke, so it took some time to get it to service). Yesterday, I was finally reunited with it, turned it on, launched Chrome, and saw it again. It gave me a smile, and I realized I never got to post it, so I tweeted this:

The tweet kinda blew up! It seems many, many developers identify with it. A few also disagreed with it, especially with the “Does it actually work?” line. So I figured I should write a bit about the rationale behind it. I originally wrote it in a tweet, but then I realized I should probably post it in a less transient medium, that is more well suited to longer text.

When somebody starts coding, getting the code to work is already difficult enough, so there is no space for other priorities. Learning to formalize one’s thought to the degree a computer demands, and then serialize this thinking with an unforgiving syntax, is hard. Writing code that works is THE priority, and whether it’s good code is not even a consideration.

For more experienced programmers, whether it works is ephemeral: today it works, tomorrow a commit causes a regression, the day after another commit fixes it (yes, even with TDD. No testsuite gets close to 100% coverage). Whereas readability & maintainability do not fluctuate much. If they are not prioritized from the beginning, they are much harder to accomplish when you already have a large codebase full of technical debt.

Code written by experienced programmers that doesn’t work can often be fixed with hours or days of debugging. A nontrivial codebase that is not readable can take months or years to rewrite. So one tends to gravitate towards prioritizing what is harder to fix later.

The “peak of drought” and other over-abstractions

Many developers identified with the “peak of drought”. Indeed, like other aspects of maintainability, DRY is not even a concern at first. At some point, a programmer learns about the importance of DRY and gradually begins abstracting away duplication. However, you can have too much of a good thing: soon the need to abstract away any duplication becomes all consuming and leads to absurd, awkward abstractions which actually get in the way and produce needless couplings, often to avoid duplicating very little code, once. In my own “peak of drought” (which lasted far longer than the graph above suggests), I’ve written many useless functions, with parameters that make no sense, just to avoid duplicating a few lines of code once.

Many articles have been written about this phenomenon, so I’m not going to repeat their arguments here. As a programmer accumulates even more experience, they start seeing the downsides of over-abstraction and over-normalization and start favoring a more moderate approach which prioritizes readability over DRY when they are at odds.

A similar thing happens with design patterns too. At some point, a few years in, a developer reads a book or takes a course about design patterns. Soon thereafter, their code becomes so littered with design patterns that it is practically incomprehensible. “When all you have is a hammer, everything looks like a nail”. I have a feeling that Java and Java-like languages are particularly accommodating to this ailment, so this phenomenon tends to proliferate in their codebases. At some point, the developer has to go back to their past code, and they realize themselves that it is unreadable. Eventually, they learn to use design patterns when they are actually useful, and favor readability over design patterns when the two are at odds.

What aspects of your coding practice have changed over the years? How has your perspective shifted? What mistakes of the past did you eventually realize?

Parsel: A tiny, permissive CSS selector parser

I’ve posted before about my work for the Web Almanac this year. To make it easier to calculate the stats about CSS selectors, we looked to use an existing selector parser, but most were too big and/or had dependencies or didn’t account for all selectors we wanted to parse, and we’d need to write our own walk and specificity methods anyway. So I did what I usually do in these cases: I wrote my own!

You can find it here: https://projects.verou.me/parsel/

It not only parses CSS selectors, but also includes methods to walk the AST produced, as well as calculate specificity as an array and convert it to a number for easy comparison.
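To illustrate why a single number is handy for comparison, here is a self-contained sketch. These helpers are hypothetical and are not Parsel's implementation. They treat the specificity triple as digits of a number in a base large enough that no digit overflows.

```javascript
// Hypothetical helper, not Parsel's implementation: treat the specificity
// triple as the digits of a number in the given base.
function specificityToNumber(specificity, base) {
    return specificity.reduce((total, digit) => total * base + digit, 0);
}

// Positive if `a` is more specific than `b`, negative if less, 0 if equal.
function compareSpecificity(a, b) {
    // Pick a base larger than every component so digits never carry over
    const base = Math.max(...a, ...b) + 1;
    return specificityToNumber(a, base) - specificityToNumber(b, base);
}

// #nav a  →  [1, 0, 1];  ul li a.active:hover  →  [0, 2, 3]
console.log(compareSpecificity([1, 0, 1], [0, 2, 3]) > 0); // true
```

Choosing the base from the values being compared avoids the classic pitfall of a fixed base, where many classes could appear to outweigh a single id.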

It is one of my first libraries released as an ES module, and there are instructions about both using it as a module, and as a global, for those who would rather not deal with ES modules yet, because convenient as ESM are, I wouldn’t want to exclude those less familiar with modern JS.

Please try it out and report any bugs! We plan to use it for Almanac stats in the next few days, so if you can spot bugs sooner rather than later, you can help that volunteer effort. I’m primarily interested in (realistic) valid selectors that are parsed incorrectly. I’m aware there are many invalid selectors that are parsed weirdly, but that’s not a focus (hence the “permissive” aspect, there are many invalid selectors it won’t throw on, and that’s by design to keep the code small, the logic simple, and the functionality future-proof).

How it works

If you’re just interested in using this selector parser, read no further. This section is about how the parser works, for those interested in this kind of thing. :)

I first started by writing a typical parser, with character-by-character gobbling and different modes, with code somewhat inspired by my familiarity with jsep. I quickly realized that was a more fragile approach for what I wanted to do, and would result in a much larger module. I also missed the ease and flexibility of doing things with regexes.

However, since CSS selectors include strings and parens that can be nested, parsing them with regexes is a fool’s errand. Nested structures are not regular languages as my CS friends know. You cannot use a regex to find the closing parenthesis that corresponds to an opening parenthesis, since you can have other nested parens inside it. And it gets even more complex when there are other tokens that can nest, such as strings or comments. What if you have an opening paren that contains a string with a closing paren, like e.g. ("foo)")? A regex would match the closing paren inside the string. In fact, parsing the language of nested parens (strings like (()(()))) with regexes is one of the typical (futile) exercises in a compilers course. Students struggle to do it because it’s an impossible task, and learn the hard way that not everything can be parsed with regexes.

Unlike a typical programming language with lots of nested structures however, the language of CSS selectors is more limited. There are only two nested structures: strings and parens, and they only appear in specific types of selectors (namely attribute selectors, pseudo-classes and pseudo-elements). Once we get those out of the way, everything else can be easily parsed by regexes. So I decided to go with a hybrid approach: The selector is first looked at character-by-character, to extract strings and parens. We only extract top-level parens, since anything inside them can be parsed separately (when it’s a selector), or not at all. The strings are replaced by a single character, as many times as the length of the string, so that any character offsets do not change, and the strings themselves are stored in a stack. Same with parens.
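The string-masking step could look roughly like the sketch below. This is an illustration of the idea rather than Parsel’s code: the function name `maskStrings`, the `§` placeholder and the shape of the returned object are all assumptions.

```javascript
// Hypothetical sketch of the masking step, not Parsel's implementation.
// Replaces each quoted string with placeholder characters of the same length,
// so that character offsets in the masked selector match the original.
function maskStrings(selector, placeholder = "§") {
  const strings = [];
  let masked = "";
  let i = 0;

  while (i < selector.length) {
    const char = selector[i];

    if (char === '"' || char === "'") {
      // Scan forward to the matching quote, skipping escaped characters
      let end = i + 1;
      while (end < selector.length && selector[end] !== char) {
        end += selector[end] === "\\" ? 2 : 1;
      }
      end = Math.min(end + 1, selector.length); // include the closing quote

      const str = selector.slice(i, end);
      strings.push({ start: i, str });
      masked += placeholder.repeat(str.length);
      i = end;
    }
    else {
      masked += char;
      i++;
    }
  }

  return { masked, strings };
}

const result = maskStrings('a[title="foo)"]');
console.log(result.masked); // a[title=§§§§§§]
console.log(result.strings); // [ { start: 8, str: '"foo)"' } ]
```

Since the closing paren inside the string is now hidden, a later pass can look for parens without being fooled by it, and the stored `start` offsets make it possible to put the strings back afterwards.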

After that point, this modified selector language is a regular language that can be parsed with regexes. To do so, I follow an approach inspired by the early days of Prism: An object literal of tokens in the order they should be matched in, and a function that tokenizes a string by iteratively matching tokens from an object literal. In fact, this function was taken from an early version of Prism and modified.
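A stripped-down version of this kind of tokenizer might look like the following. The grammar here is a tiny made-up one covering only ids, classes, combinators and type selectors, and `tokenize` is a simplified sketch of the approach, not the actual function from Prism or Parsel.

```javascript
// Hypothetical mini-grammar; order matters, earlier entries are matched first.
const TOKENS = {
  id: /#(?<name>[-\w]+)/g,
  class: /\.(?<name>[-\w]+)/g,
  combinator: /\s*[>+~]\s*|\s+/g,
  type: /(?<name>[-\w]+|\*)/g,
};

function tokenize(input, grammar = TOKENS) {
  // Start with one untokenized string.
  // Each pass splits the remaining strings around matches of one token type.
  let pieces = [input];

  for (const [type, pattern] of Object.entries(grammar)) {
    pieces = pieces.flatMap(piece => {
      if (typeof piece !== "string") {
        return [piece]; // already a token, leave it alone
      }

      const result = [];
      let lastIndex = 0;

      for (const match of piece.matchAll(pattern)) {
        if (match.index > lastIndex) {
          result.push(piece.slice(lastIndex, match.index)); // text before the match
        }
        result.push({ type, content: match[0], ...match.groups });
        lastIndex = match.index + match[0].length;
      }

      if (lastIndex < piece.length) {
        result.push(piece.slice(lastIndex)); // text after the last match
      }

      return result;
    });
  }

  return pieces;
}

const tokens = tokenize("ul#nav > li.item");
console.log(tokens.map(token => token.type).join(" "));
// type id combinator type class
```

Anything still left as a plain string at the end is text that no token matched, which a permissive parser can simply pass through.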

After we have the list of tokens as a flat array, we can restore strings and parens, and then nest them appropriately to create an AST.

Also note that the token regexes use the new-ish named capture groups feature in ES2018, since it’s now supported pretty widely in terms of market share. For wider support, you can transpile :)
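For anyone who hasn’t used named capture groups yet, here is a small example. The regex is a simplified attribute selector pattern written for this illustration, not one of Parsel’s actual token regexes.

```javascript
// Illustrative pattern only: matches [name], [name=value], [name^=value] etc.
const attribute = /\[(?<name>[-\w]+)(?:(?<operator>[~|^$*]?=)(?<value>[^\]]+))?\]/;

const groups = '[href^="https"]'.match(attribute).groups;
console.log(groups.name); // href
console.log(groups.operator); // ^=
console.log(groups.value); // "https"

// Groups that did not participate in the match are undefined
const bare = "[hidden]".match(attribute).groups;
console.log(bare.name, bare.operator); // hidden undefined
```

Compared to numbered groups, the captured parts can be read by name, so adding or reordering groups in the regex doesn’t break the code that consumes them.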

Introspecting CSS via the CSS OM: Get supported properties, shorthands, longhands

For some of the statistics we are going to study for this year’s Web Almanac we may end up needing a list of CSS shorthands and their longhands. Now this is typically done by maintaining a data structure by hand or guessing based on property name structure. But I knew that if we were going to do it by hand, it’s very easy to miss a few of the less popular ones, and the naming rule where shorthands are a prefix of their longhands has failed to get standardized and now has even more exceptions than it used to. And even if we do an incredibly thorough job, next year the data structure will be inaccurate, because CSS and its implementations evolve fast. The browser knows what the shorthands are, surely we should be able to get the information from it …right? Then we could use it directly if this is a client-side library, or in the case of the Almanac, where code needs to be fast because it will run on millions of websites, paste the precomputed result into whatever script we run.

There are essentially two steps for this:

1. Get a list of all CSS properties

2. Figure out how to test if a given property is a shorthand and how to get its longhands if so.

I decided to tell this story in the inverse order. In my exploration, I first focused on figuring out shorthands (2), because I had coded getting a list of properties many times before, but since (1) is useful in its own right (and probably in more use cases), I felt it makes more sense to examine that first.

Note: I’m using document.body instead of a dummy element in these examples, because I like to experiment in about:blank, and it’s just there and because this way you can just copy stuff to the console and try it wherever, even right here while reading this post. However, if you use this as part of code that runs on a real website, it goes without saying that you should create and test things on a dummy element instead!

Getting a list of all CSS properties from the browser

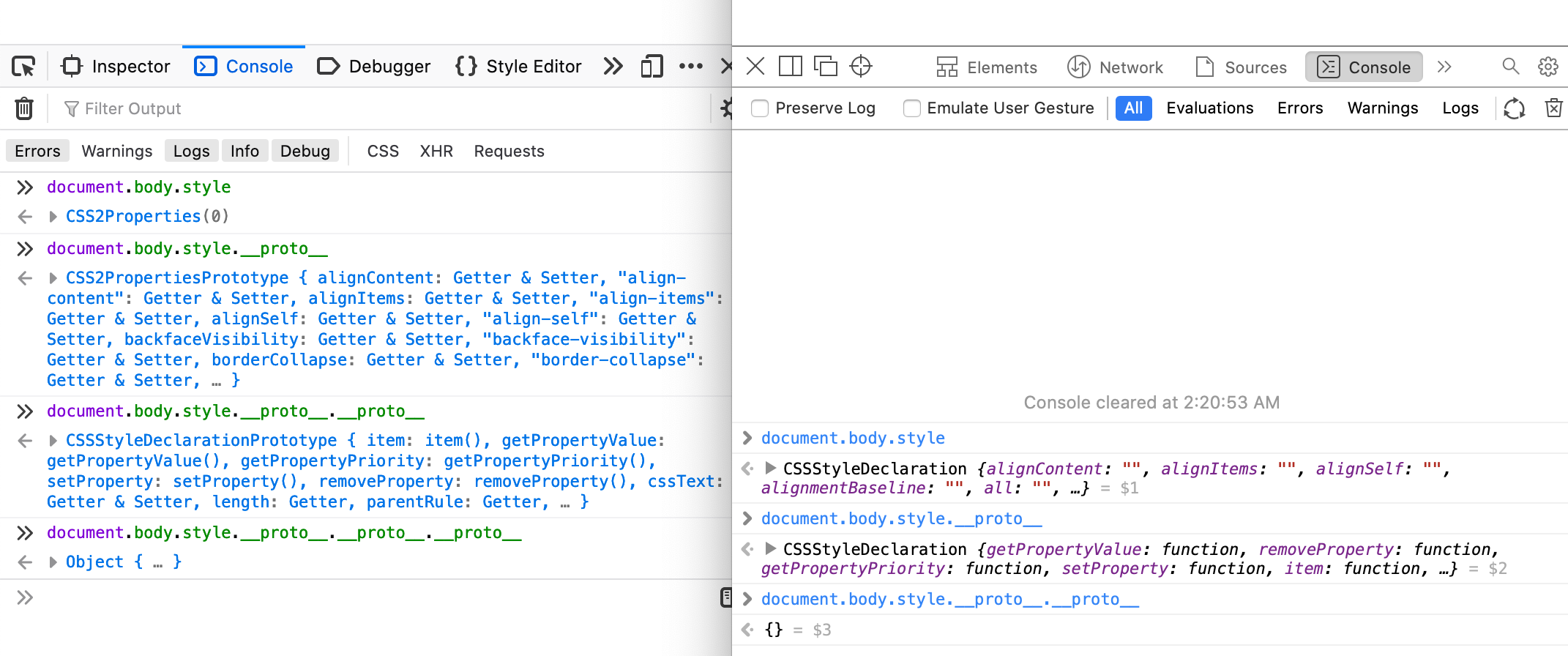

In Chrome and Safari, this is as simple as Object.getOwnPropertyNames(document.body.style). However, in Firefox, this doesn’t work. Why is that? To understand this (and how to work around it), we need to dig a bit deeper.

In Chrome and Safari, element.style is a CSSStyleDeclaration instance. In Firefox however, it is a CSS2Properties instance, which inherits from CSSStyleDeclaration. CSS2Properties is an older interface, defined in the DOM 2 Specification, which is now obsolete. In the current relevant specification, CSS2Properties is gone, and has been merged with CSSStyleDeclaration. However, Firefox hasn’t caught up yet.

Firefox on the left, Safari on the right. Chrome behaves like Safari.

Since the properties are on CSSStyleDeclaration, they are not own properties of element.style, so Object.getOwnPropertyNames() fails to return them. However, we can extract the CSSStyleDeclaration instance by using __proto__ or Object.getPrototypeOf(), and then Object.getOwnPropertyNames(Object.getPrototypeOf(document.body.style)) gives us what we want!

So we can combine the two to get a list of properties regardless of browser:

let style = document.body.style;
let properties = Object.getOwnPropertyNames(
  style.hasOwnProperty("background")? style : style.__proto__
);

And then, we just drop non-properties, and de-camelCase:

properties = properties.filter(p => style[p] === "") // drop functions etc
  .map(prop => { // de-camelCase
    prop = prop.replace(/[A-Z]/g, function($0) { return '-' + $0.toLowerCase() });
    if (prop.startsWith("webkit-")) { // only add the dash if it is not already there
      prop = "-" + prop;
    }
    return prop;
  });

You can see a codepen with the result here:

https://codepen.io/leaverou/pen/eYJodjb?editors=0010

Testing if a property is a shorthand and getting a list of longhands

The main things to note are:

- When you set a shorthand on an element’s inline style, you are essentially setting all its longhands.

- element.style is actually array-like, with numerical properties and .length that gives you the number of properties set on it. This means you can use the spread operator on it:

> document.body.style.background = "red";
> [...document.body.style]
< [
  "background-image",
  "background-position-x",
  "background-position-y",
  "background-size",
  "background-repeat-x",
  "background-repeat-y",
  "background-attachment",
  "background-origin",
  "background-clip",
  "background-color"
]

Interestingly, document.body.style.cssText serializes to background: red and not all the longhands.

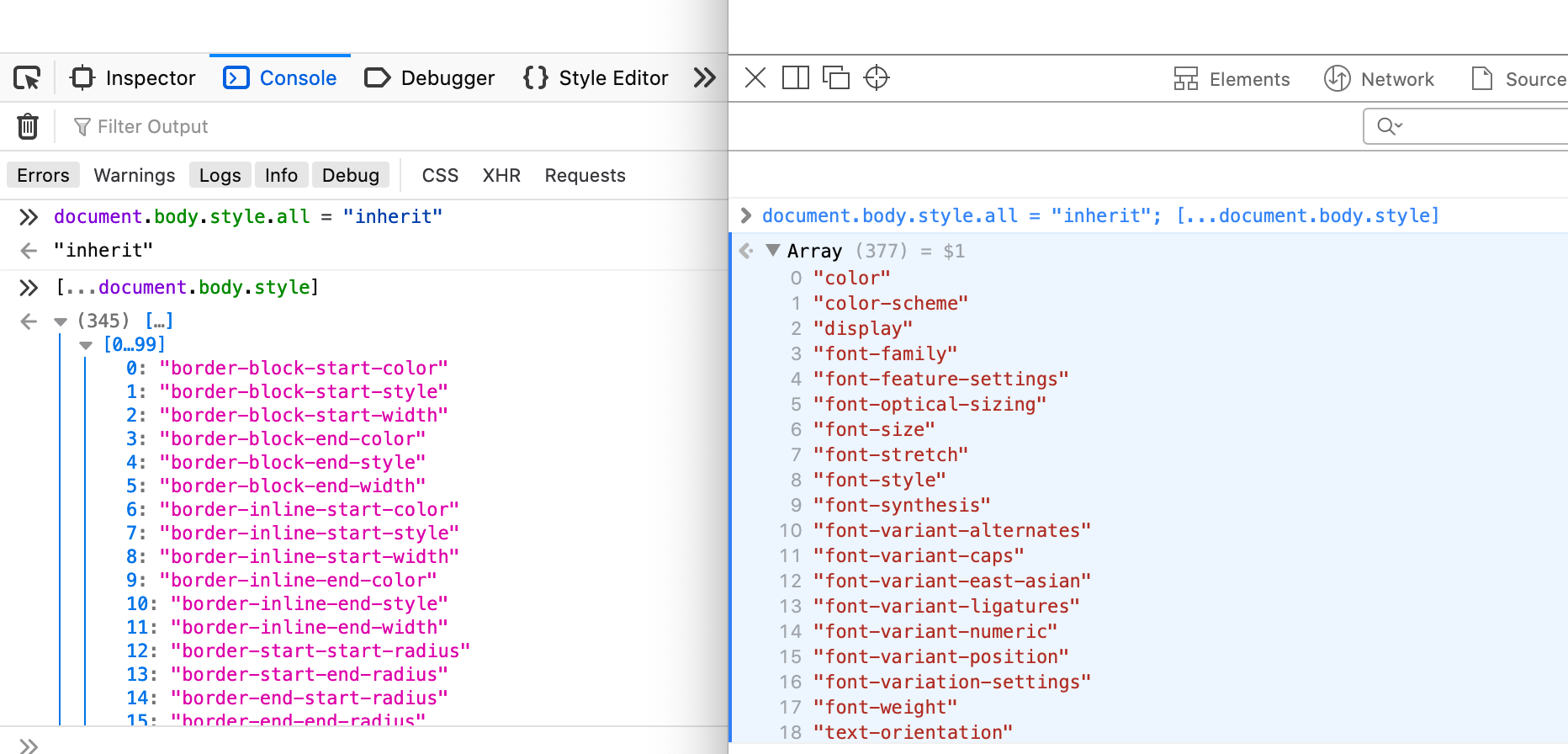

There is one exception: The all property. In Chrome, it does not quite behave as a shorthand:

> document.body.style.all = "inherit";
> [...document.body.style]
< ["all"]

Whereas in Safari and Firefox, it actually returns every single property that is not a shorthand!

Firefox and Safari expand all to literally all non-shorthand properties.

While this is interesting from a trivia point of view, it doesn’t actually matter for our use case, since we don’t typically care about all when constructing a list of shorthands, and if we do we can always add or remove it manually.

So, to recap, we can easily get the longhands of a given shorthand:

function getLonghands(property) {
  let style = document.body.style;
  style[property] = "inherit"; // a value that works in every property
  let ret = [...style];
  style.cssText = ""; // clean up
  return ret;
}

Putting the pieces together

You can see how all the pieces fit together (and the output!) in this codepen:

https://codepen.io/leaverou/pen/gOPEJxz?editors=0010

How many of these shorthands did you already know?

Import non-ESM libraries in ES Modules, with client-side vanilla JS

In case you haven’t heard, ECMAScript modules (ESM) are now supported everywhere!

While I do have some gripes with them, it’s too late for any of these things to change, so I’m embracing the good parts and have cautiously started using them in new projects. I do quite like that I can just use import statements and dynamic import() for dependencies with URLs right from my JS, without module loaders, extra <script> tags in my HTML, or hacks with dynamic <script> tags and load events (in fact, Bliss has had a helper for this very thing that I’ve used extensively in older projects). I love that I don’t need any libraries for this, and I can use it client-side, anywhere, even in my codepens.

Once you start using ESM, you realize that most libraries out there are not written in ESM, nor do they include ESM builds. Many are still using globals, and those that target Node.js use CommonJS (CJS). What can we do in that case? Unfortunately, ES Modules are not really designed with any import (pun intended) mechanism for these syntaxes, but there are some strategies we could employ.

Libraries using globals

Technically, a JS file can be parsed as a module even with no imports or exports. Therefore, almost any library that uses globals can be fair game, it can just be imported as a module with no exports! How do we do that?

While you may not see this syntax a lot, you don’t actually need to name anything in the import statement. There is a syntax to import a module entirely for its side effects:

import "url/to/library.js";

This syntax works fine for libraries that use globals, since declaring a global is essentially a side effect, and all modules share the same global scope. For this to work, the imported library needs to satisfy the following conditions:

- It should declare the global as a property on window (or self), not via var Foo or this. In modules, top-level variables are local to the module scope, and this is undefined, so the last two ways would not work.

- Its code should not violate strict mode

- The URL is either same-origin or CORS-enabled. While <script> can run cross-origin resources, import sadly cannot.

Basically, you are running a library as a module that was never written with the intention to be run as a module. Many are written in a way that also works in a module context, but not all. ExploringJS has an excellent summary of the differences between the two. For example, here is a trivial codepen loading jQuery via this method.

Libraries using CJS without dependencies

I dealt with this today, and it’s what prompted this post. I was trying to play around with Rework CSS, a CSS parser used by the HTTPArchive for analyzing CSS in the wild. However, all its code and documentation assumes Node.js. If I could avoid it, I’d really rather not have to make a Node.js app to try this out, or have to dive in module loaders to be able to require CJS modules in the browser. Was there anything I could do to just run this in a codepen, no strings attached?

After a little googling, I found this issue. So there was a JS file I could import and get all the parser functionality. Except …there was one little problem. When you look at the source, it uses module.exports. If you just import that file, you predictably get an error that module is not defined, not to mention there are no ESM exports.

My first thought was to stub module as a global variable, import this as a module, and then read module.exports and give it a proper name:

window.module = {};
import "https://cdn.jsdelivr.net/gh/reworkcss/css@latest/lib/parse/index.js";
console.log(module.exports);

However, I was still getting the error that module was not defined. How was that possible?! They all share the same global context!! *pulls hair out* After some debugging, it dawned on me: static import statements are hoisted; the “module” was getting executed before the code that imports it and stubs module.

Dynamic imports to the rescue! import() is executed exactly where it’s called, and returns a promise. So this actually works:

window.module = {};
import("https://cdn.jsdelivr.net/gh/reworkcss/css@latest/lib/parse/index.js").then(_ => {
  console.log(module.exports);
});

We could even turn it into a wee function, which I cheekily called require():

async function require(path) {
  let _module = window.module;
  window.module = {};
  await import(path);
  let exports = module.exports;
  window.module = _module; // restore global
  return exports;
}

(async () => { // top-level await cannot come soon enough…
  let parse = await require("https://cdn.jsdelivr.net/gh/reworkcss/css@latest/lib/parse/index.js");
  console.log(parse("body { color: red }"));
})();

You can fiddle with this code in a live pen here.

Do note that this technique will only work if the module you’re importing doesn’t import other CJS modules. If it does, you’d need a more elaborate require() function, which is left as an exercise for the reader. Also, just like the previous technique, the code needs to comply with strict mode and be served either same-origin or with CORS enabled.

A similar technique can be used to load AMD modules via import(), just stub define() and you’re good to go.

So, with this technique I was able to quickly whip up a ReworkCSS playground. You just edit the CSS in CodePen and see the resulting AST, and you can even fork it to share a specific AST with others! :)

https://codepen.io/leaverou/pen/qBbQdGG

Update: CJS with static imports

After this article was posted, a clever hack was pointed out to me on Twitter:

While this works great if you can have multiple separate files, it doesn’t work when you’re e.g. quickly trying out a pen. Data URIs to the rescue! Turns out you can import a module from a data URI!

So let’s adapt our Rework example to use this:

https://codepen.io/leaverou/pen/xxZmWvx

Addendum: ESM gripes

Since I was bound to get questions about what my gripes are with ESM, I figured I should mention them pre-emptively.

First off, a little context. Nearly all of the JS I write is for libraries. I write libraries as a hobby, I write libraries as my job, and sometimes I write libraries to help me do my job. My job is usability (HCI) research (and specifically making programming easier), so I’m very sensitive to developer experience issues. I want my libraries to be usable not just by seasoned developers, but by novices too.

ESM has not been designed with novices in mind. It evolved from the CJS/UMD/AMD ecosystem, in which most voices are seasoned developers.

My main gripe with them is how they expect full adoption, and settle for nothing less. There is no way to create a bundle of a library that can be used both traditionally, with a global, or as an ES module. There is also no standard way to import older libraries, or libraries using other module patterns (yes, this very post is about doing that, but essentially these are hacks, and there should be a better way). I understand the benefits of static analysis for imports and exports, but I wish there was a dynamic alternative to export, analogous to the dynamic import().

In terms of migrating to ESM, I also dislike how opinionated they are: strict mode is great, but forcing it doesn’t help people trying to migrate older codebases. Restricting imports to same-origin or CORS-enabled URLs is also a pain: using <script>s from other domains made it possible to quickly experiment with various libraries, and I would love for that to be true for modules too.

But overall, I’m excited that JS now natively supports a module mechanism, and I expect any library I release in the future to utilize it.

Releasing MaVoice: A free app to vote on repo issues

First off, some news: I agreed to be this year’s CSS content lead for the Web Almanac! One of the first things to do is to flesh out what statistics we should study to answer the question “What is the state of CSS in 2020?”. You can see last year’s chapter to get an idea of what kind of statistics could help answer that question.

Of course, my first thought was “We should involve the community! People might have great ideas of statistics we could study!”. But what should we use to vote on ideas and make them rise to the top?

I wanted to use a repo to manage all this, since I like all the conveniences for managing issues. However, there is not much on Github for voting. You can add 👍 reactions, but not sort by them, and voting itself is tedious: you need to open the comment, click on the reaction, then go back to the list of issues, rinse and repeat. Ideally, I wanted something like UserVoice™️, which lets you vote with one click, and sorts proposals by votes.

And then it dawned on me: I’ll just build a Mavo app on top of the repo issues, that displays them as proposals to be voted on and sorts by 👍 reactions, UserVoice™️-style but without the UserVoice™️ price tag. 😎 In fact, I had started such a Mavo app a couple years ago, and never finished or released it. So, I just dug it up and resurrected it from its ashes! It’s — quite fittingly I think — called MaVoice.

You can set it to any repo via the repo URL parameter, and any label via the labels URL param (defaults to enhancement) to create a customized URL for any repo you want in seconds! For example, here’s the URL for the css-almanac repo, which only displays issues with the label “proposed stat”: https://projects.verou.me/mavoice/?repo=leaverou/css-almanac&labels=proposed%20stat

While this did need some custom JS, unlike other Mavo apps which need none, I’m still pretty happy I could spin up this kind of app with < 100 lines of JS :)

Yes, it’s still rough around the edges, and I’m sure you can find many things that could be improved, but it does the job for now, and PRs are always welcome 🤷🏽♀️

The main caveat if you decide to use this for your own repo: Because (to my knowledge) Github API still does not provide a way to sort issues by 👍 reactions, or even reactions in general (in either the v3 REST API, or the GraphQL API), issues are instead requested sorted by comment count, and are sorted by 👍 reactions client-side, right before render. Due to API limitations, this API call can only fetch the top 100 results. This means that if you have more than 100 issues to display (i.e. more than 100 open issues with the given label), it could potentially be inaccurate, especially if you have issues with many reactions and few comments.
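The client-side sorting step is conceptually tiny. Here is a sketch of it in plain JS (MaVoice itself is a Mavo app, so this is not its actual code). It assumes each issue object carries a `reactions` object with a `"+1"` count; treat that shape, the function name and the sample data as assumptions.

```javascript
// Assumed shape: issue.reactions["+1"] holds the number of 👍 reactions.
// Issues without reaction data are treated as having zero votes.
function sortByThumbsUp(issues) {
  const votes = issue => issue.reactions?.["+1"] ?? 0;
  return [...issues].sort((a, b) => votes(b) - votes(a)); // copy, then sort descending
}

// Made-up sample data, in the order a "sort by comments" request would return it
const issues = [
  { title: "Stat A", comments: 9, reactions: { "+1": 2 } },
  { title: "Stat C", comments: 4 },
  { title: "Stat B", comments: 1, reactions: { "+1": 7 } },
];

console.log(sortByThumbsUp(issues).map(issue => issue.title));
// [ 'Stat B', 'Stat A', 'Stat C' ]
```

"Stat B" is exactly the kind of issue the caveat is about: many reactions, few comments. With more than 100 matching issues it could fall outside the fetched page and never reach the sort at all.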

Another caveat is that because this is basically reactions on Github issues, there is no limit on how many issues someone can vote on. In theory, if they’re a bad actor (or just overexcited), they can just vote on everything. But I suppose that’s an intrinsic problem with using reactions to vote for things, having a UI for it just reveals the existing issue, it doesn’t create it.

Hope you enjoy, and don’t forget to vote on which CSS stats we should study!

The Cicada Principle, revisited with CSS variables

Many of today’s web crafters were not writing CSS at the time Alex Walker’s landmark article The Cicada Principle and Why it Matters to Web Designers was published in 2011. Last I heard of it was in 2016, when it was used in conjunction with blend modes to pseudo-randomize backgrounds even further.

So what is the Cicada Principle and how does it relate to web design in a nutshell? It boils down to: when using repeating elements (tiled backgrounds, different effects on multiple elements etc), using prime numbers for the size of the repeating unit maximizes the appearance of organic randomness. Note that this only works when the parameters you set are independent.

When I recently redesigned my blog, I ended up using a variation of the Cicada principle to pseudo-randomize the angles of code snippets. I didn’t think much of it until I saw this tweet:

This made me think: hey, maybe I should actually write a blog post about the technique. After all, the technique itself is useful for way more than angles on code snippets.

The main idea is simple: You write your main rule using CSS variables, and then use :nth-of-*() rules to set these variables to something different every N items. If you use enough variables, and choose your Ns for them to be prime numbers, you reach a good appearance of pseudo-randomness with relatively small Ns.

In the case of code samples, I only have two different top cuts (going up or going down) and two different bottom cuts (same), which produce 2*2 = 4 different shapes. Since I only had four shapes, I wanted to maximize the pseudo-randomness of their order. A first attempt looks like this:

pre {
  clip-path: polygon(var(--clip-top), var(--clip-bottom));
  --clip-top: 0 0, 100% 2em;
  --clip-bottom: 100% calc(100% - 1.5em), 0 100%;
}

pre:nth-of-type(odd) {
  --clip-top: 0 2em, 100% 0;
}

pre:nth-of-type(3n + 1) {
  --clip-bottom: 100% 100%, 0 calc(100% - 1.5em);
}

This way, the exact sequence of shapes repeats every 2 * 3 = 6 code snippets. Also, the alternative --clip-bottom doesn’t really get the same visibility as the others, being present only 33.333% of the time. However, if we just add one more selector:

pre {
  clip-path: polygon(var(--clip-top), var(--clip-bottom));
  --clip-top: 0 0, 100% 2em;
  --clip-bottom: 100% calc(100% - 1.5em), 0 100%;
}

pre:nth-of-type(odd) {
  --clip-top: 0 2em, 100% 0;
}

pre:nth-of-type(3n + 1),
pre:nth-of-type(5n + 1) {
  --clip-bottom: 100% 100%, 0 calc(100% - 1.5em);
}

Now the exact same sequence of shapes repeats every 2 * 3 * 5 = 30 code snippets, probably way more than I will have in any article. And it’s more fair to the alternate --clip-bottom, which now gets 1/3 + 1/5 - 1/15 = 46.67%, which is almost as much as the alternate --clip-top gets!
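If you’d rather verify these numbers than trust the arithmetic, the selectors are easy to simulate. The sketch below is only a model of the three rules above (the `simulate` function and the letter codes are made up for this illustration): uppercase means the alternate value applies, lowercase means the base value.

```javascript
// Model of the rules above: T/t = alternate/base --clip-top, B/b = alternate/base --clip-bottom
function simulate(count) {
  const shapes = [];

  for (let i = 1; i <= count; i++) { // :nth-of-type() indices are 1-based
    const altTop = i % 2 === 1; // :nth-of-type(odd)
    const altBottom = i % 3 === 1 || i % 5 === 1; // :nth-of-type(3n + 1), :nth-of-type(5n + 1)
    shapes.push((altTop ? "T" : "t") + (altBottom ? "B" : "b"));
  }

  return shapes;
}

const shapes = simulate(60);
const firstCycle = shapes.slice(0, 30);

// The sequence of the first 30 snippets repeats exactly in the next 30…
console.log(firstCycle.join() === shapes.slice(30, 60).join()); // true

// …but not after only 6, as it did with the single 3n + 1 rule
console.log(shapes.slice(0, 6).join() === shapes.slice(6, 12).join()); // false

// 14 of every 30 snippets get the alternate bottom cut: 14 / 30 ≈ 46.67%
const altBottomCount = firstCycle.filter(shape => shape.endsWith("B")).length;
console.log(altBottomCount); // 14
```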

You can explore this effect in this codepen:

https://codepen.io/leaverou/pen/8541bfd3a42551f8845d668f29596ef9?editors=1100

Or, to better explore how different CSS creates different pseudo-randomness, you can use this content-less version with three variations:

https://codepen.io/leaverou/pen/NWxaPVx

Of course, the illusion of randomness is much better with more shapes, e.g. if we introduce a third type of edge we get 3 * 3 = 9 possible shapes:

https://codepen.io/leaverou/pen/dyGmbJJ?editors=1100

I also used primes 7 and 11, so that the sequence repeats every 77 items. In general, the larger primes you use, the better the illusion of randomness, but you need to include more selectors, which can get tedious.

Other examples

So this got me thinking: What else would this technique be cool on? Especially if we include more values as well, we can pseudo-randomize the result itself better, and not just the order of only 4 different results.

So I did a few experiments.

Pseudo-randomized color swatches

https://codepen.io/leaverou/pen/NWxXQKX

Pseudo-randomized color swatches, with variables for hue, saturation, and lightness.

https://codepen.io/leaverou/pen/RwrLPer

Which one looks more random? Why do you think that is?

Pseudo-randomized border-radius

Admittedly, this one can be done with just longhands, but since I realized this after I had already made it, I figured eh, I may as well include it 🤷🏽♀️

https://codepen.io/leaverou/pen/ZEQXOrd

It is also really cool when combined with pseudo-random colors (just hue this time):

https://codepen.io/leaverou/pen/oNbGzeE

Pseudo-randomized snowfall

Lots of things here:

- Using translate and transform together to animate them separately without resorting to CSS.registerProperty()

- Pseudo-randomized horizontal offset, animation-delay, font-size

- Technically we don’t need CSS variables to pseudo-randomize font-size, we can just set the property itself. However, variables enable us to pseudo-randomize it via a multiplier, in order to decouple the base font size from the pseudo-randomness, so we can edit them independently. And then we can use the same multiplier in animation-duration to make smaller snowflakes fall slower!

https://codepen.io/leaverou/pen/YzwrWvV?editors=1100

Conclusions

In general, the larger the primes you use, the better the illusion of randomness. With smaller primes, you will get more variation, but less appearance of randomness.

There are two main ways to use primes to create the illusion of randomness with :nth-child() selectors:

The first way is to set each trait on :nth-child(pn + b) where p is a prime that increases with each value and b is constant for each trait, like so:

:nth-child(3n + 1) { property1: value11; }
:nth-child(5n + 1) { property1: value12; }
:nth-child(7n + 1) { property1: value13; }
:nth-child(11n + 1) { property1: value14; }
...
:nth-child(3n + 2) { property2: value21; }
:nth-child(5n + 2) { property2: value22; }
:nth-child(7n + 2) { property2: value23; }
:nth-child(11n + 2) { property2: value24; }
...

The benefit of this approach is that you can have as few or as many values as you like. The drawback is that because primes are sparse, and become sparser as we go, you will have a lot of “holes” where your base value is applied.
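To get a feel for how many “holes” that is, we can count them for the four property1 rules shown above (primes 3, 5, 7, 11 with b = 1). This is just a quick back-of-the-envelope script, counting over one full cycle of 3 * 5 * 7 * 11 items:

```javascript
const primes = [3, 5, 7, 11];
const period = primes.reduce((a, b) => a * b, 1); // 1155 items until the pattern repeats

let holes = 0;
for (let i = 1; i <= period; i++) {
  // An item is a "hole" if none of the :nth-child(pn + 1) rules match it
  if (!primes.some(p => i % p === 1)) {
    holes++;
  }
}

console.log(holes, period); // 480 1155
console.log((100 * holes / period).toFixed(1) + "%"); // 41.6%
```

So with these four rules, roughly four in ten items still fall back to the base value, and each larger prime you add chips away less of that than the previous one did.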

The second way (which is more on par with the original Cicada principle) is to set each trait on :nth-child(pn + b) where p is constant per trait, and b increases with each value:

:nth-child(5n + 1) { property1: value11; }
:nth-child(5n + 2) { property1: value12; }
:nth-child(5n + 3) { property1: value13; }
:nth-child(5n + 4) { property1: value14; }
...
:nth-child(7n + 1) { property2: value21; }
:nth-child(7n + 2) { property2: value22; }
:nth-child(7n + 3) { property2: value23; }
:nth-child(7n + 4) { property2: value24; }
...

This creates a better overall impression of randomness (especially if you order the values in a pseudo-random way too) without “holes”, but is more tedious, as you need as many values as the prime you’re using.
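Since the tedium here is purely mechanical, the rules for this second approach can be generated. Below is a small sketch of such a generator; `cicadaRules` is a hypothetical helper written for this illustration, and the `--hue` and `--radius` variables and their values are arbitrary.

```javascript
// Hypothetical helper: one rule per value, with b going from 1 up to values.length.
// Pass at most (prime - 1) values so that the remaining items keep the base value.
function cicadaRules(property, prime, values, selector = ":nth-child") {
  return values
    .map((value, i) => `${selector}(${prime}n + ${i + 1}) { ${property}: ${value}; }`)
    .join("\n");
}

const css = [
  cicadaRules("--hue", 5, [40, 120, 200, 280]),
  cicadaRules("--radius", 7, ["10%", "20%", "30%", "40%", "50%", "60%"]),
].join("\n\n");

console.log(css);
```

The output can be pasted into a stylesheet as is, or the function can be ported to whatever CSS preprocessor you already use.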

What other cool examples can you think of?

Refactoring optional chaining into a large codebase: lessons learned

Chinese translation by Coink Wang

Now that optional chaining is supported across the board, I decided to finally refactor Mavo to use it (yes, yes, we do provide a transpiled version as well for older browsers, settle down). This is a moment I have been waiting for a long time, as I think optional chaining is the single most substantial JS syntax improvement since arrow functions and template strings. Yes, I think it’s more significant than async/await, just because of the mere frequency of code it improves. Property access is literally everywhere.

First off, what is optional chaining, in case you haven’t heard of it before?

You know how you can’t just do foo.bar.baz() without checking if foo exists, and then if foo.bar exists, and then if foo.bar.baz exists because you’ll get an error? So you have to do something awkward like:

if (foo && foo.bar && foo.bar.baz) {
  foo.bar.baz();
}

Or even:

foo && foo.bar && foo.bar.baz && foo.bar.baz();

Some even contort object destructuring to help with this. With optional chaining, you can just do this:

foo?.bar?.baz?.()

It supports normal property access, brackets (foo?.[bar]), and even function invocation (foo?.()). Sweet, right??
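Here are the three forms side by side on some made-up objects (the `app` and `empty` names and their contents are just for illustration):

```javascript
const app = {
  user: {
    name: "Ada",
    greet() { return "hi"; },
  },
  tags: ["css", "js"],
};
const empty = {};

// Property access
console.log(app.user?.name); // Ada
console.log(empty.user?.name); // undefined, instead of a TypeError

// Bracket access
console.log(app.tags?.[1]); // js
console.log(empty.tags?.[1]); // undefined

// Function invocation
console.log(app.user?.greet?.()); // hi
console.log(empty.user?.greet?.()); // undefined
```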

Yes, mostly. Indeed, there is SO MUCH code that can be simplified with it, it’s incredible. But there are a few caveats.

Patterns to search for

Suppose you decided to go ahead and refactor your code as well. What to look for?

There is of course the obvious foo && foo.bar that becomes foo?.bar.

There is also the conditional version of it, that we described in the beginning of this article, which uses if() for some or all of the checks in the chain.

There are also a few more patterns.

Ternary

foo? foo.bar : defaultValue

Which can now be written as:

foo?.bar || defaultValue

or, using the other awesome new operator, the nullish coalescing operator:

foo?.bar ?? defaultValue
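One thing to keep in mind when picking between these: the three forms only agree when foo.bar is either missing or truthy. A quick comparison, using made-up settings objects and 50 as the default:

```javascript
const withTernary = foo => foo ? foo.bar : 50;
const withOr = foo => foo?.bar || 50;
const withNullish = foo => foo?.bar ?? 50;

// foo is missing: all three fall back to the default
console.log(withTernary(undefined), withOr(undefined), withNullish(undefined)); // 50 50 50

// foo.bar is falsy but meaningful: || discards it
const muted = { bar: 0 };
console.log(withTernary(muted), withOr(muted), withNullish(muted)); // 0 50 0

// foo exists but has no bar: the ternary does not fall back
const blank = {};
console.log(withTernary(blank), withOr(blank), withNullish(blank)); // undefined 50 50
```

So ?? is the closest match when values like 0 or "" are valid, but neither replacement is a strict drop-in for the ternary if foo can exist without a bar.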

Array checking

if (foo.length > 3) {
  foo[2]
}

which now becomes:

foo?.[2]

Note that this is no substitute for a real array check, like the one done by Array.isArray(foo). Do not go about replacing proper array checking with duck typing because it’s shorter. We stopped doing that over a decade ago.

Regex match

Forget about things like this:

let match = "#C0FFEE".match(/#([0-9A-F]+)/i);
let hex = match && match[1];

Or even things like that:

let hex = ("#C0FFEE".match(/#([0-9A-F]+)/i) || [,])[1];

Now it’s just:

let hex = "#C0FFEE".match(/#([0-9A-F]+)/i)?.[1];

In our case, I was able to even remove two utility functions and replace their invocations with this.

Feature detection

In simple cases, feature detection can be replaced by ?.. For example:

if (element.prepend) element.prepend(otherElement);

becomes:

element.prepend?.(otherElement);

Don’t overdo it

While it may be tempting to convert code like this:

if (foo) {
  something(foo.bar);
  somethingElse(foo.baz);
  andOneLastThing(foo.yolo);
}

to this:

something(foo?.bar);
somethingElse(foo?.baz);
andOneLastThing(foo?.yolo);

Don’t. You’re essentially having the JS runtime check foo three times instead of one. You may argue these things don’t matter much anymore performance-wise, but it’s the same repetition for the human reading your code: they have to mentally process the check for foo three times instead of one. And if they need to add another statement using property access on foo, they need to add yet another check, instead of just using the conditional that’s already there.

Caveats

You still need to check before assignment

You may be tempted to convert things like:

if (foo && foo.bar) {
  foo.bar.baz = someValue;
}

to:

foo?.bar?.baz = someValue;

Unfortunately, that’s not possible and will error. This was an actual snippet from our codebase:

if (this.bar && this.bar.edit) {
  this.bar.edit.textContent = this._("edit");
}

Which I happily refactored to:

if (this.bar?.edit) {
  this.bar.edit.textContent = this._("edit");
}

All good so far, this works nicely. But then I thought, wait a second… do I need the conditional at all? Maybe I can just do this:

this.bar?.edit?.textContent = this._("edit");

Nope. Uncaught SyntaxError: Invalid left-hand side in assignment. Can’t do that. You still need the conditional. I literally kept doing this, and I’m glad I had ESLint in my editor to warn me about it without having to actually run the code.
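Because this is a syntax error and not a runtime error, it is reported as soon as the code is parsed, whether or not the line would ever run. The sketch below demonstrates that with a small `parses()` helper (a made-up name) that only compiles a snippet via the Function constructor and never calls it:

```javascript
// Returns whether a snippet is syntactically valid, without running it
function parses(source) {
  try {
    new Function(source); // compiles the body, does not execute it
    return true;
  }
  catch (e) {
    if (e instanceof SyntaxError) {
      return false;
    }
    throw e;
  }
}

console.log(parses("foo?.bar?.baz = someValue;")); // false
console.log(parses("if (foo?.bar) { foo.bar.baz = someValue; }")); // true
```

This is also why a linter can catch the mistake in the editor: no code needs to run for the problem to be detected.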

It’s very easy to put the ?. in the wrong place or forget some ?.

Note that if you’re refactoring a long chain with optional chaining, you often need to insert multiple ?. after the first one, for every member access that may or may not exist, otherwise you will get errors once the optional chaining returns undefined.

Or, sometimes you may think you do, because you put the ?. in the wrong place.

Take the following real example. I originally refactored this:

this.children[index]? this.children[index].element : this.marker

into this:

this.children?.[index].element ?? this.marker

then got a TypeError: Cannot read property 'element' of undefined. Oops! Then I fixed it by adding an additional ?.:

this.children?.[index]?.element ?? this.marker

This works, but is superfluous, as pointed out in the comments. I just needed to move the ?.:

this.children[index]?.element ?? this.marker
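The difference between the two placements is easier to see in isolation. In this sketch, `misplaced` and `correct` are hypothetical stand-ins for the two versions of the expression, operating on a plain array instead of this.children:

```javascript
const marker = "marker";
const children = [{ element: "first" }];

// ?. guards `children`, but nothing guards children[index]
const misplaced = (children, index) => children?.[index].element ?? marker;

// ?. guards children[index], which is the thing that may be missing
const correct = (children, index) => children[index]?.element ?? marker;

console.log(correct(children, 0)); // first
console.log(correct(children, 5)); // marker

try {
  misplaced(children, 5);
}
catch (e) {
  console.log(e instanceof TypeError); // true
}

// When `children` itself is nullish, ?. short-circuits the rest of the chain,
// so the later .element access is skipped entirely
console.log(misplaced(undefined, 5)); // marker
```

The last line is the reason only one ?. is needed per value that may be missing: once a ?. short-circuits, everything to its right in the same chain is skipped.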

Also, as pointed out in the comments, be careful about replacing array length checks with optional access to the index. This might be bad for performance, because out-of-bounds access on an array de-optimizes the code in V8 (it has to check the prototype chain for such a property too, not only decide that there is no such index in the array).

It can introduce bugs if you’re not careful

If, like me, you go on a refactoring spree, it’s easy after a certain point to just introduce optional chaining in places where it actually ends up changing what your code does and introducing subtle bugs.

null vs undefined

Possibly the most common pattern is replacing foo && foo.bar with foo?.bar. While in most cases these work equivalently, this is not true for every case. When foo is null, the former returns null, whereas the latter returns undefined. This can cause bugs to creep up in cases where the distinction matters and is probably the most common way to introduce bugs with this type of refactoring.
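
A quick way to see the difference is to run both forms over a few inputs. The function names below are made up for illustration. Besides null, the sketch also includes falsy values that are not nullish (0 and the empty string), where the two forms diverge as well:

```javascript
// Hypothetical helpers wrapping the two forms.
function withAnd(foo) {
  return foo && foo.bar;
}

function withChain(foo) {
  return foo?.bar;
}

console.log(withAnd(null), withChain(null));           // null undefined
console.log(withAnd(undefined), withChain(undefined)); // undefined undefined
console.log(withAnd(0), withChain(0));                 // 0 undefined
console.log(withAnd({ bar: 1 }), withChain({ bar: 1 })); // 1 1
```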

Equality checks

Be careful about converting code like this:

if (foo && bar && foo.prop1 === bar.prop2) { /* ... */ }

to code like this:

if (foo?.prop1 === bar?.prop2) { /* ... */ }

In the first case, the condition will not be true, unless both foo and bar are truthy. However, in the second case, if both foo and bar are nullish, the conditional will be true, because both operands will return undefined!

The same bug can creep in even if the second operand doesn’t include any optional chaining: as long as it could be undefined, you can get unintended matches.
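
The following sketch shows the unintended match. The function names are hypothetical, and `Boolean()` is only there so both versions return a plain true or false:

```javascript
// Original form: both objects must exist before the comparison happens.
function guardedMatch(foo, bar) {
  return Boolean(foo && bar && foo.prop1 === bar.prop2);
}

// Refactored form: a missing object turns its side into undefined.
function chainedMatch(foo, bar) {
  return foo?.prop1 === bar?.prop2;
}

console.log(guardedMatch(null, null)); // false
console.log(chainedMatch(null, null)); // true, undefined === undefined

// Also matches when only one side is missing and the other property is unset.
console.log(guardedMatch(null, {})); // false
console.log(chainedMatch(null, {})); // true
```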

Operator precedence slips

One thing to look out for is that optional chaining has higher precedence than &&. This becomes particularly significant when you replace an expression using && that also involves equality checks, since the (in)equality operators are sandwiched between ?. and &&, having lower precedence than the former and higher than the latter.

if (foo && foo.bar === baz) { /* ... */ }

What is compared with baz here? foo.bar or foo && foo.bar? Since && has lower precedence than ===, it’s as if we had written:

if (foo && (foo.bar === baz)) { /* ... */ }

Note that the conditional cannot ever be executed if foo is falsy. However, once we refactor it to use optional chaining, it is now as if we were comparing (foo && foo.bar) to baz:

if (foo?.bar === baz) { /* ... */ }

An obvious case where the different semantics affect execution is when baz is undefined. In that case, we can enter the conditional when foo is nullish, since then optional chaining will return undefined, which is basically the case we described above. In most other cases this doesn’t make a big difference. It can however be pretty bad when instead of an equality operator, you have an inequality operator, which still has the same precedence. Compare this:

if (foo && foo.bar !== baz) { /* ... */ }

with this:

if (foo?.bar !== baz) { /* ... */ }

Now, we are going to enter the conditional every time foo is nullish, as long as baz is not undefined! The difference is not noticeable in an edge case anymore, but in the average case! 😱
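
As a rough illustration of how common this case is, compare the two conditions directly. The function names are made up, and `Boolean()` is only used to normalize the result:

```javascript
// Original form: never true when foo is falsy.
function guardedDiffers(foo, baz) {
  return Boolean(foo && foo.bar !== baz);
}

// Refactored form: true whenever foo is nullish and baz is not undefined.
function chainedDiffers(foo, baz) {
  return foo?.bar !== baz;
}

console.log(guardedDiffers(null, 1)); // false
console.log(chainedDiffers(null, 1)); // true

// With an actual object, both forms agree.
console.log(guardedDiffers({ bar: 2 }, 1)); // true
console.log(chainedDiffers({ bar: 2 }, 1)); // true
```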

Return statements

Rather obvious after you think about it, but it’s easy to forget return statements in the heat of the moment. You cannot replace things like this:

if (foo && foo.bar) {

return foo.bar();

}

with:

return foo?.bar?.();

In the first case, you return conditionally, whereas in the second case you return always. This will not introduce any issues if the conditional is the last statement in your function, but it will change the control flow if it’s not.
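
Here is a sketch of a function where the conditional is not the last statement. The function names and the "fallback" value are hypothetical:

```javascript
// Original shape: returns early only when foo.bar exists.
function conditionalReturn(foo) {
  if (foo && foo.bar) {
    return foo.bar();
  }
  return "fallback";
}

// Over-eager refactor: the first return always runs,
// so the line after it is never reached.
function unconditionalReturn(foo) {
  return foo?.bar?.();
  return "fallback"; // dead code
}

console.log(conditionalReturn({}));   // fallback
console.log(unconditionalReturn({})); // undefined
```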

Sometimes, it can fix bugs too!

Take a look at this code I encountered during my refactoring:

/**

* Get the current value of a CSS property on an element

*/

getStyle: (element, property) => {

if (element) {

var value = getComputedStyle(element).getPropertyValue(property);

if (value) {

return value.trim();

}

}

},

Can you spot the bug? If value is an empty string (and given the context, it could very well be), the function will return undefined, because an empty string is falsy! Rewriting it to use optional chaining fixes this:

if (element) {

var value = getComputedStyle(element).getPropertyValue(property);

return value?.trim();

}

Now, if value is the empty string, it will still return an empty string and it will only return undefined when value is nullish.
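
The same difference can be reproduced without a browser. The two helpers below are hypothetical reductions of the code above, with `getComputedStyle()` left out so that the snippet runs anywhere:

```javascript
// Truthiness check: the empty string is treated like a missing value.
function trimIfTruthy(value) {
  if (value) {
    return value.trim();
  }
}

// Optional chaining: only null and undefined are treated as missing.
function trimIfPresent(value) {
  return value?.trim();
}

console.log(trimIfTruthy(""));       // undefined
console.log(trimIfPresent(""));      // (empty string)
console.log(trimIfPresent(null));    // undefined
console.log(trimIfPresent(" red ")); // red
```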

Finding usages becomes trickier

This was pointed out by Razvan Caliman on Twitter:

Bottom line

In the end, this refactor made Mavo about 2KB lighter and saved 37 lines of code. It did however make the transpiled version 79 lines and 9KB (!) heavier.

Here is the relevant commit, for your perusal. I tried my best to exercise restraint and not introduce any unrelated refactoring in this commit, so that the diff is chock-full of optional chaining examples. It has 104 additions and 141 deletions, so I’d wager it has about 100 examples of optional chaining in practice. Hope it’s helpful!

Hybrid positioning with CSS variables and max()

Notice how the navigation on the left behaves wrt scrolling: it acts like an absolutely positioned element at first, then becomes fixed once the header scrolls out of the viewport.

One of my side projects these days is a color space agnostic color conversion & manipulation library, which I’m developing together with my husband, Chris Lilley (you can see a sneak peek of its docs above). He brings his color science expertise to the table, and I bring my JS & API design experience, so it’s a great match and I’m really excited about it! (If you’re serious about color and you’re building a tool or demo that would benefit from it, contact me; we need as much early feedback on the API as we can get!)

For the documentation, I wanted to have the page navigation on the side (when there is enough space), right under the header when scrolled all the way to the top, but I wanted it to scroll with the page (as if it was absolutely positioned) until the header is out of view, and then stay at the top for the rest of the scrolling (as if it used fixed positioning).

It sounds very much like a case for position: sticky, doesn’t it? However, an element with position: sticky behaves like it’s relatively positioned when it’s in view and like it’s using position: fixed when it’s scrolled out of view but its container is still in view. What I wanted here was different. I basically wanted position: absolute while the header was in view and position: fixed after. Yes, there are ways I could have contorted position: sticky to do what I wanted, but was there another solution?

In the past, we’d just go straight to JS, slap position: absolute on our element, calculate the offset in a scroll event listener and set a top CSS property on our element. However, this is flimsy and violates separation of concerns, as we now need to modify JavaScript to change styling. Pass!

What if instead we had access to the scroll offset in CSS? Would that be sufficient to solve our use case? Let’s find out!

As I pointed out in my Increment article about CSS Variables last month, and in my CSS Variables series of talks a few years ago, we can use JS to set & update CSS variables on the root that describe pure data (mouse position, input values, scroll offset etc), and then use them as-needed throughout our CSS, reaching near-perfect separation of concerns for many common cases. In this case, we write 3 lines of JS to set a --scrolltop variable:

let root = document.documentElement;

document.addEventListener("scroll", evt => {

root.style.setProperty("--scrolltop", root.scrollTop);

});
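
One possible variation on the snippet above is sketched here. It is an assumption on my part rather than something the page strictly needs: it also writes the variable once up front, so that `var(--scrolltop)` is defined before the first scroll event, and it registers the listener as passive. The helper name `trackScrollTop` is hypothetical:

```javascript
// Hypothetical helper: keeps --scrolltop in sync with root.scrollTop.
// `root` is the element whose scroll offset we expose,
// `target` is the object that fires the scroll events.
function trackScrollTop(root, target) {
  const update = () => {
    root.style.setProperty("--scrolltop", root.scrollTop);
  };

  // A passive listener tells the browser we will never call preventDefault().
  target.addEventListener("scroll", update, { passive: true });

  // Set the initial value so the variable exists before any scrolling.
  update();
  return update;
}

// Only wire it up when a DOM is available.
if (typeof document !== "undefined") {
  trackScrollTop(document.documentElement, document);
}
```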

Then, we can give our navigation position: fixed, and subtract var(--scrolltop) to offset any scroll (11rem is our header height):

#toc {

position: fixed;

top: calc(11rem - var(--scrolltop) * 1px);

}

This works up to a certain point, but once --scrolltop exceeds the height of the header, top becomes negative and our navigation starts drifting off screen:

Just subtracting --scrolltop essentially implements absolute positioning with position: fixed.

We’ve basically re-implemented absolute positioning with position: fixed, which is not very useful! What we really want is to keep the result of the calculation from dropping below 0, so that our navigation always remains visible. Wouldn’t it be great if there was a max-top property, just like max-width, so that we could do this?

One thought might be to change the JS and use Math.min() to cap --scrolltop at a specific number that corresponds to our header height. However, while this would work for this particular case, it means that --scrolltop cannot be used generically anymore, because it’s tailored to our specific use case and does not correspond to the actual scroll offset. Also, this encodes more about styling in the JS than is ideal, since the clamping we need is presentation-related: if our style was different, we may not need it anymore. But how can we do this without resorting to JS?

Thankfully, we recently got implementations for probably the one feature I was pining for the most in CSS, for years: min(), max() and clamp() functions, which bring the power of min/max constraints to any CSS property! And even for width and height, they are strictly more powerful than min/max-* because you can have any number of minimums and maximums, whereas the min/max-* properties limit you to only one.

While browser compatibility is actually pretty good, we can’t just use it with no fallback, since this is one of the features where lack of support can be destructive. We will provide a fallback in our base style and use @supports to conditionally override it:

#toc {

position: fixed;

top: 11em;

}

@supports (top: max(1em, 1px)) {

#toc {

top: max(0em, 11rem - var(--scrolltop) * 1px);

}

}

Aaand that was it, this gives us the result we wanted!
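
To see what the max() expression evaluates to as the page scrolls, here is the same arithmetic written out in JS. This is purely an illustration of the math, not a suggestion to move it out of CSS. The function name `tocTop` is made up, and the 176px default assumes that 11rem resolves to 176px (a 16px root font size):

```javascript
// Mirrors `top: max(0em, 11rem - var(--scrolltop) * 1px)` in pixels.
// Assumption: 11rem === 176px, i.e. the root font size is 16px.
function tocTop(scrollTop, headerHeight = 176) {
  return Math.max(0, headerHeight - scrollTop);
}

console.log(tocTop(0));   // 176, right under the header
console.log(tocTop(100)); // 76, moving up with the page
console.log(tocTop(176)); // 0, header just left the viewport
console.log(tocTop(500)); // 0, stays pinned to the top
```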

And because --scrolltop is sufficiently generic, we can re-use it anywhere in our CSS where we need access to the scroll offset. I’ve actually used exactly the same --scrolltop setting JS code in my blog, to keep the gradient centerpoint on my logo while maintaining a fixed background attachment, so that various elements can use the same background and have it appear continuous, i.e. not affected by their own background positioning area:

The website header and the post header are actually different elements. The background appears continuous because it’s using background-attachment: fixed, and the --scrolltop variable is used to emulate background-attachment: scroll while still using the viewport as the background positioning area for both backgrounds.

Appendix: Why didn’t we just use the cascade?

You might wonder, why do we even need @supports? Why not use the cascade, like we’ve always done to provide fallbacks for values without sufficiently universal support? I.e., why not just do this:

#toc {

position: fixed;

top: 11em;

top: max(0em, 11rem - var(--scrolltop) * 1px);

}

The reason is that when you use CSS variables, this does not work as expected. The browser doesn’t know if your property value is valid until the variable is resolved, and by then it has already processed the cascade and has thrown away any potential fallbacks.

So, what would happen if we went this route and max() was not supported? Once the browser realizes that the second value is invalid due to using an unknown function, it will make the property invalid at computed value time, which essentially equates to the initial keyword, and for the top property, the initial value is auto, not the 11em fallback we declared. The fallback is simply lost, and your navigation could end up overlapping the header when scrolled close to the top, which is terrible!