On compliance vs readability: Generating text colors with CSS

Can we emulate the upcoming CSS contrast-color() function via CSS features that have already widely shipped?

And if so, what are the tradeoffs involved and how to best balance them?

Relative Colors

Out of all the CSS features I have designed, Relative Colors aka Relative Color Syntax (RCS) is definitely among the ones I’m most proud of. In a nutshell, they allow CSS authors to derive a new color from an existing color value by doing arbitrary math on color components in any supported color space:

--color-lighter: hsl(from var(--color) h s calc(l * 1.2));

--color-lighterer: oklch(from var(--color) calc(l + 0.2) c h);

--color-alpha-50: oklab(from var(--color) l a b / 50%);

The elevator pitch was that by allowing lower level operations they provide authors flexibility on how to derive color variations, giving us more time to figure out what the appropriate higher level primitives should be.

As of May 2024, RCS has shipped in every browser except Firefox, but given that it is an Interop 2024 focus area, that Firefox has expressed a positive standards position, and that the Bugzilla issue has had some recent activity and has been assigned, I am optimistic it will ship in Firefox soon. My guess is that it will become Baseline by the end of 2024.

Even if my prediction is off, it already is available to 83% of users worldwide,

and if you sort its caniuse page by usage,

you will see the vast majority of the remaining 17% doesn’t come from Firefox,

but from older Chrome and Safari versions.

I think its current market share warrants production use today,

as long as we use @supports to make sure things work in non-supporting browsers, even if less pretty.

Most Relative Colors tutorials revolve around its primary driving use cases: making tints and shades or other color variations by tweaking a specific color component up or down, and/or overriding a color component with a fixed value, like the example above. While this does address some very common pain points, it is merely scratching the surface of what RCS enables. This article explores a more advanced use case, with the hope that it will spark more creative uses of RCS in the wild.

The CSS contrast-color() function

One of the big longstanding CSS pain points is that it’s impossible to automatically specify a text color that is guaranteed to be readable on arbitrary backgrounds, e.g. white on darker colors and black on lighter ones.

Why would one need that? The primary use case is when colors are outside the CSS author’s control. This includes:

- User-defined colors. An example you’re likely familiar with: GitHub labels. Think of how you select an arbitrary color when creating a label and GitHub automatically picks the text color — often poorly (we’ll see why in a bit)

- Colors defined by another developer. E.g. you’re writing a web component that supports certain CSS variables for styling. You could require separate variables for the text and background, but that reduces the usability of your web component by making it more of a hassle to use. Wouldn’t it be great if it could just use a sensible default that you can, but rarely need to, override?

- Colors defined by an external design system, like Open Props, Material Design, or even (gasp) Tailwind.

Even in a codebase where every line of CSS code is controlled by a single author, reducing couplings can improve modularity and facilitate code reuse.

The good news is that this is not going to be a pain point for much longer.

The CSS function contrast-color() was designed to address exactly that.

This is not new; you may have heard of it under its earlier name, color-contrast().

I recently drove consensus to scope it down to an MVP that addresses the most prominent pain points and can actually ship soonish,

as it circumvents some very difficult design decisions that had caused the full-blown feature to stall.

I then added it to the spec per WG resolution, though some details still need to be ironed out.

Usage will look like this:

background: var(--color);

color: contrast-color(var(--color));

Glorious, isn’t it? Of course, soonish in spec years is still, well, years. As a data point, you can see in my past spec work that with a bit of luck (and browser interest), it can take as little as 2 years to get a feature shipped across all major browsers after it’s been specced. When the standards work is also well-funded, there have even been cases where a feature went from conception to baseline in 2 years, with Cascade Layers being the poster child for this: proposal by Miriam in Oct 2019, shipped in every major browser by Mar 2022. But 2 years is still a long time (and there are no guarantees it won’t be longer). What is our recourse until then?

As you may have guessed from the title, the answer is yes.

It may not be pretty, but there is a way to emulate contrast-color() (or something close to it) using Relative Colors.

Using RCS to automatically compute a contrasting text color

In the following we will use the OKLCh color space, which is the most perceptually uniform polar color space that CSS supports.

Let’s assume there is a Lightness value above which black text is guaranteed to be readable regardless of the chroma and hue, and below which white text is guaranteed to be readable. We will validate that assumption later, but for now let’s take it for granted. In the rest of this article, we’ll call that value the threshold and represent it as Lthreshold.

We will compute this value more rigorously in the next section (and prove that it actually exists!),

but for now let’s use 0.7 (70%).

We can assign it to a variable to make it easier to tweak:

--l-threshold: 0.7;

Let’s work backwards from the desired result. We want to come up with an expression that is composed of widely supported CSS math functions, and will return 1 if L ≤ Lthreshold and 0 otherwise. If we could write such an expression, we could then use that value as the lightness of a new color:

--l: /* ??? */;

color: oklch(var(--l) 0 0);

How could we simplify the task?

One way is to relax what our expression needs to return.

We don’t actually need an exact 0 or 1.

If we can manage to find an expression that will give us a value ≤ 0 when L > Lthreshold

and ≥ 1 when L ≤ Lthreshold,

we can just use clamp(0, /* expression */, 1) to get the desired result.

One idea would be to use ratios, as they have this nice property where they are > 1 if the numerator is larger than the denominator and ≤ 1 otherwise.

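The ratio Lthreshold / L is > 1 when L < Lthreshold and < 1 when L > Lthreshold. Subtracting 1 therefore gives a positive number in the first case and a negative one in the second, and multiplying by infinity pushes the result far outside the [0, 1] range in either direction, ready to be clamped.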

Putting it all together, it looks like this:

--l-threshold: 0.7;

--l: clamp(0, (var(--l-threshold) / l - 1) * infinity, 1);

color: oklch(from var(--color) var(--l) 0 h);

One worry might be that if L gets close enough to the threshold we could get a number between 0 and 1, but in my experiments this never happened, presumably since precision is finite.
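To make the behavior of the expression concrete, here is the arithmetic for two sample lightness values with the 0.7 threshold used above. The values L = 0.5 and L = 0.9 are arbitrary picks for illustration, not results from any experiment.

```latex
\begin{aligned}
L = 0.5:&\quad \mathrm{clamp}\!\left(0,\ \left(\tfrac{0.7}{0.5} - 1\right)\cdot\infty,\ 1\right)
  = \mathrm{clamp}(0,\ 0.4\cdot\infty,\ 1)
  = \mathrm{clamp}(0,\ +\infty,\ 1) = 1 \quad\text{(white text)}\\
L = 0.9:&\quad \mathrm{clamp}\!\left(0,\ \left(\tfrac{0.7}{0.9} - 1\right)\cdot\infty,\ 1\right)
  \approx \mathrm{clamp}(0,\ -0.22\cdot\infty,\ 1)
  = \mathrm{clamp}(0,\ -\infty,\ 1) = 0 \quad\text{(black text)}
\end{aligned}
```

In both cases the multiplication by infinity discards the magnitude of the difference and keeps only its sign, which is exactly the step function we were after.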

Fallback for browsers that don’t support RCS

The last piece of the puzzle is to provide a fallback for browsers that don’t support RCS.

We can use @supports with any color property and any relative color value as the test, e.g.:

.contrast-color {

/* Fallback */

background: hsl(0 0% 0% / 50%);

color: white;

@supports (color: oklch(from red l c h)) {

--l: clamp(0, (var(--l-threshold) / l - 1) * infinity, 1);

color: oklch(from var(--color) var(--l) 0 h);

background: none;

}

}

In the spirit of making sure things work in non-supporting browsers, even if less pretty, some fallback ideas could be:

- A white or semi-transparent white background with black text or vice versa.

- -webkit-text-stroke with a color opposite to the text color. This works better with bolder text, since half of the outline is inside the letterforms.

- Many text-shadow values with a color opposite to the text color. This works better with thinner text, as it’s drawn behind the text.
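As a rough sketch of the outline idea, the fallback could look something like the following. The stroke width and the default threshold in var() are placeholder values chosen for illustration, not tested recommendations.

```css
.contrast-color {
	/* Fallback for browsers without RCS: white text with a thin black outline.
	   0.04em is an arbitrary starting point; tune it to your font weight. */
	color: white;
	-webkit-text-stroke: 0.04em black;

	@supports (color: oklch(from red l c h)) {
		--l: clamp(0, (var(--l-threshold, 0.7) / l - 1) * infinity, 1);
		color: oklch(from var(--color) var(--l) 0 h);
		/* Remove the outline once the computed text color is available */
		-webkit-text-stroke-width: 0;
	}
}
```

Because half of the stroke is painted inside the letterforms, this variant is probably best reserved for bold labels or headings.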

Does this mythical L threshold actually exist?

In the previous section we’ve made a pretty big assumption: That there is a Lightness value (Lthreshold) above which black text is guaranteed to be readable regardless of the chroma and hue, and below which white text is guaranteed to be readable regardless of the chroma and hue. But does such a value exist? It is time to put this claim to the test.

When people first hear about perceptually uniform color spaces like Lab, LCH or their improved versions, Oklab and OKLCh, they imagine that they can infer the contrast between two colors by simply comparing their L(ightness) values. This is unfortunately not true, as contrast depends on more factors than perceptual lightness. However, there is certainly significant correlation between Lightness values and contrast.

At this point, I should point out that while most web designers are aware of the WCAG 2.1 contrast algorithm, which is part of the Web Content Accessibility Guidelines and baked into law in many countries, it has been known for years that it produces extremely poor results. So bad, in fact, that in some tests it performs almost as badly as random chance for any color that is not very light or very dark. There is a newer contrast algorithm, APCA, that produces far better results but is not yet part of any standard or legislation, and there have previously been some bumps along the way with making it freely available to the public (which seem to be largely resolved).

So where does that leave web authors? In quite a predicament as it turns out. It seems that the best way to create accessible color pairings right now is a two-step process:

- Use APCA to ensure actual readability

- Compliance failsafe: Ensure the result does not actively fail WCAG 2.1.

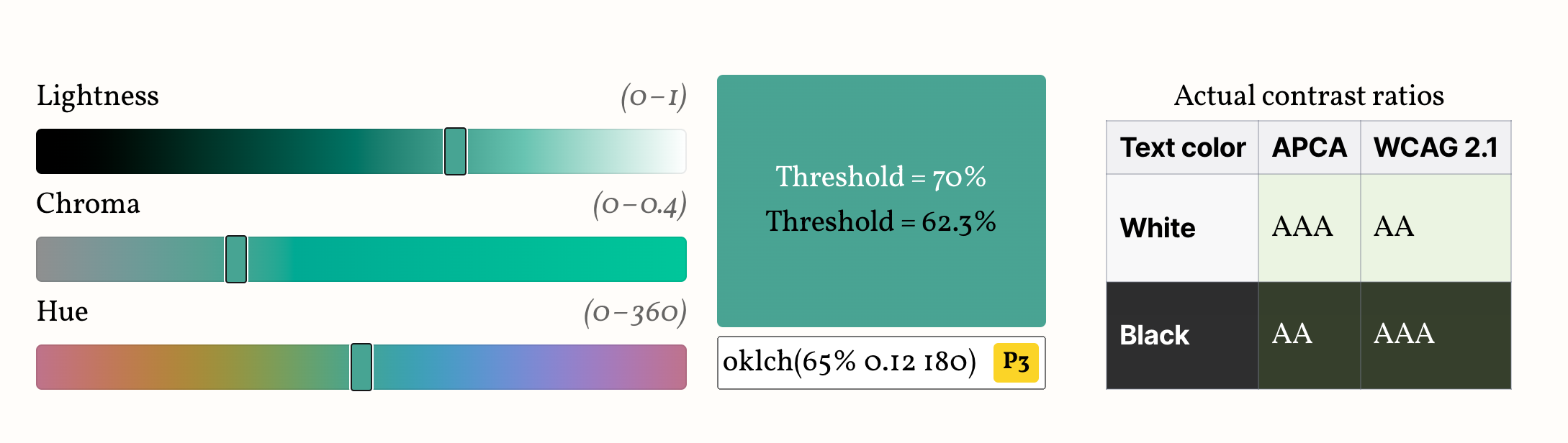

I ran some quick experiments using Color.js in which I iterated over the OKLCh reference range (loosely based on the P3 gamut) in increments of increasing granularity and calculated the lightness ranges for colors where white was the “best” text color (= produced higher contrast than black) and vice versa. I also computed the brackets for each level (fail, AA, AAA, AAA+) for both APCA and WCAG.

I then turned my exploration into an interactive playground where you can run the same experiments yourself, potentially with narrower ranges that fit your use case, or with higher granularity.

This is the table produced with C ∈ [0, 0.4] (step = 0.025) and H ∈ [0, 360) (step = 1):

| Text color | Level | APCA min | APCA max | WCAG 2.1 min | WCAG 2.1 max |
|---|---|---|---|---|---|
| white | best | 0% | 75.2% | 0% | 61.8% |
| white | fail | 71.6% | 100% | 62.4% | 100% |
| white | AA | 62.7% | 80.8% | 52.3% | 72.1% |
| white | AAA | 52.6% | 71.7% | 42% | 62.3% |
| white | AAA+ | 0% | 60.8% | 0% | 52.7% |
| black | best | 66.1% | 100% | 52% | 100% |
| black | fail | 0% | 68.7% | 0% | 52.7% |
| black | AA | 60% | 78.7% | 42% | 61.5% |
| black | AAA | 69.4% | 87.7% | 51.4% | 72.1% |
| black | AAA+ | 78.2% | 100% | 62.4% | 100% |

Note that these are the min and max L values for each level. E.g. the fact that white text can fail WCAG when L ∈ [62.4%, 100%] doesn’t mean that every color with L > 62.4% will fail WCAG, just that some do. So, we can only draw meaningful conclusions by inverting the logic: Since all white text failures have an L ∈ [62.4%, 100%], it logically follows that if L < 62.4%, white text will pass WCAG regardless of what the color is.
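Spelled out, the inversion is just a contrapositive. As a sketch, write fail_white(c) for “white text on color c fails WCAG 2.1” and L(c) for the OKLCh lightness of c; both are notation introduced here, and the statement only covers the colors in the sampled range.

```latex
\forall c:\ \mathrm{fail}_{\text{white}}(c) \Rightarrow L(c) \ge 62.4\%
\quad\Longleftrightarrow\quad
\forall c:\ L(c) < 62.4\% \Rightarrow \neg\,\mathrm{fail}_{\text{white}}(c)
```

The same step turns every min or max in the table into a guarantee for the lightness values on the other side of it.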

By applying this logic to all ranges, we can draw similar guarantees for many of these brackets:

| | Text color | 0% to 52.7% | 52.7% to 62.4% | 62.4% to 66.1% | 66.1% to 68.7% | 68.7% to 71.6% | 71.6% to 75.2% | 75.2% to 100% |
|---|---|---|---|---|---|---|---|---|
| Compliance WCAG 2.1 | white | ✅ AA | ✅ AA | | | | | |
| Compliance WCAG 2.1 | black | | ✅ AA | ✅ AAA | ✅ AAA | ✅ AAA | ✅ AAA | ✅ AAA+ |
| Readability APCA | white | 😍 Best | 😍 Best | 😍 Best | 🙂 OK | 🙂 OK | | |
| Readability APCA | black | | | | | 🙂 OK | 🙂 OK | 😍 Best |

You may have noticed that in general, WCAG has a lot of false negatives around white text, and tends to place the Lightness threshold much lower than APCA. This is a known issue with the WCAG algorithm.

Therefore, to best balance readability and compliance, we should use the highest threshold we can get away with. This means:

- If passing WCAG is a requirement, the highest threshold we can use is 62.3%.

- If actual readability is our only concern, we can safely ignore WCAG and pick a threshold somewhere between 68.7% and 71.6%, e.g. 70%.

Here’s a demo so you can see how they both play out. Edit the color below to see how the two thresholds work in practice, and compare with the actual contrast brackets, shown on the table on the left.


Avoid colors marked “P3+”, “PP” or “PP+”, as these are almost certainly outside your screen gamut, and browsers currently do not gamut map properly, so the visual result will be off.

Note that if your actual color is more constrained (e.g. a subset of hues or chromas or a specific gamut), you might be able to balance these tradeoffs better by using a different threshold. Run the experiment yourself with your actual range of colors and find out!

Here are some examples of narrower ranges I have tried and the highest threshold that still passes WCAG 2.1:

| Description | Color range | Threshold |

|---|---|---|

| Modern low-end screens | Colors within the sRGB gamut | 65% |

| Modern high-end screens | Colors within the P3 gamut | 64.5% |

| Future high-end screens | Colors within the Rec.2020 gamut | 63.4% |

| Neutrals | C ∈ [0, 0.03] | 67% |

| Muted colors | C ∈ [0, 0.1] | 65.6% |

| Warm colors (reds/oranges/yellows) | H ∈ [0, 100] | 66.8% |

| Pinks/Purples | H ∈ [300, 370] | 67% |

It is particularly interesting that the threshold is improved to 64.5% by just ignoring colors that are not actually displayable on modern screens. So, assuming (though sadly this is not an assumption that currently holds true) that browsers prioritize preserving lightness when gamut mapping, we could use 64.5% and still guarantee WCAG compliance.

You can even turn this into a utility class that you can combine with different thresholds:

.contrast-color {

--l: clamp(0, (var(--l-threshold, 0.623) / l - 1) * infinity, 1);

color: oklch(from var(--color) var(--l) 0 h);

}

.pink {

--l-threshold: 0.67;

}
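The same pattern extends to the other narrowed ranges. The sketch below maps a few rows of the table above to overrides; the class names are made up for illustration, and each one assumes your colors really do stay within the stated chroma or hue range.

```css
/* Hypothetical class names; thresholds taken from the table of narrower ranges */
.neutral { --l-threshold: 0.67; }  /* C ∈ [0, 0.03] */
.muted   { --l-threshold: 0.656; } /* C ∈ [0, 0.1] */
.warm    { --l-threshold: 0.668; } /* H ∈ [0, 100] */
```

Since custom properties inherit, an override like this can also be set on an ancestor of the element that carries .contrast-color.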

Conclusion & Future work

Putting it all together, including a fallback, as well as a “fall forward” that uses contrast-color(),

the utility class could look like this:

.contrast-color {

/* Fallback for browsers that don't support RCS */

color: white;

text-shadow: 0 0 .05em black, 0 0 .05em black, 0 0 .05em black, 0 0 .05em black;

@supports (color: oklch(from red l c h)) {

--l: clamp(0, (var(--l-threshold, 0.623) / l - 1) * infinity, 1);

color: oklch(from var(--color) var(--l) 0 h);

text-shadow: none;

}

@supports (color: contrast-color(red)) {

color: contrast-color(var(--color));

text-shadow: none;

}

}
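As a usage sketch, the class can be dropped onto any element whose background comes from --color. The .tag component and its modifier classes below are hypothetical, chosen only to show one light and one dark background.

```css
/* Hypothetical component; the markup would look like
   <span class="tag contrast-color tag-danger">Breaking</span> */
.tag {
	background: var(--color);
	padding: .2em .6em;
	border-radius: .3em;
}

/* Light background: its OKLCh lightness is above the default 0.623
   threshold, so the RCS formula resolves to black text */
.tag-warning { --color: gold; }

/* Dark background: its OKLCh lightness is below the threshold,
   so the RCS formula resolves to white text */
.tag-danger { --color: darkred; }
```

The component itself never sets a text color, which is the decoupling this whole exercise is after.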

This is only a start. I can imagine many directions for improvement such as:

- Since RCS allows us to do math with any of the color components in any color space, I wonder if there is a better formula that can still be implemented in CSS and balances readability and compliance even better. E.g. I’ve had some chats with Andrew Somers (creator of APCA) right before publishing this, which suggest that doing math on luminance (the Y component of XYZ) instead could be a promising direction.

- We currently only calculate thresholds for white and black text. However, in real designs, we rarely want pure black text, which is why contrast-color() only guarantees a “very light or very dark color” unless the max keyword is used. How would this extend to darker tints of the background color?

Thanks to Chris Lilley, Andrew Somers, Cory LaViska, Elika Etemad, and Tab Atkins-Bittner for their feedback on earlier drafts of this article.
